kriegaex opened a new pull request #355:

URL: https://github.com/apache/maven-surefire/pull/355

This PR is a follow-up on #354.

Done already:

- Renamed `Surefire1881` to `Surefire1881IT`, because with the wrong name the test was not even executed before, according to the CI build logs.
- In addition to the Java agent logging IT executed in Failsafe, there is now also a JVM logging IT executed in Surefire. Both tests were in different branches of https://github.com/kriegaex/Maven_Surefire_PrintToConsoleProblems.

**TODO:** I cherry-picked the tests back onto the commit before the merge of SUREFIRE-1881 in order to verify that when a JVM hang occurs, the tests actually time out and kill the forked JVMs. The timeouts do occur, but for both test cases (JVM logging, Java agent) the JVMs keep hanging:

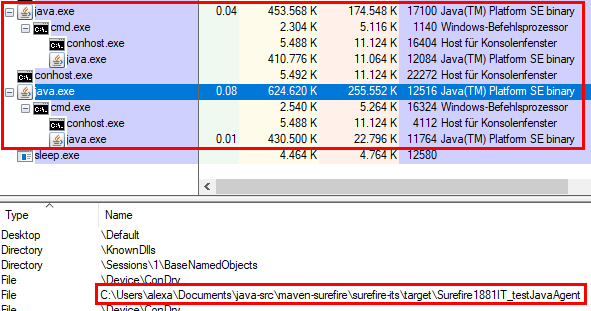

In the screenshot above, you see two process groups that keep running indefinitely until killed manually, long after the test timeouts occurred and the build finished. Per hanging test, you even see two JVMs, one for Maven and one forked by Surefire/Failsafe. All in all, each hanging test leaves 4 processes behind on Windows (2 JVMs, one shell and one console host). It is probably similar on Linux.

This means that simple JUnit test timeouts are not good enough to clean up processes in case of hanging tests. Such hangs could always occur, either here due to regressions or uncovered edge cases, or in other ITs due to other types of errors. Both on developer workstations and on CI servers like Jenkins, this can quickly deplete resources.

@Tibor17 and everyone else, do you have any ideas how to clean up the zombie processes correctly?
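One direction that might be worth exploring is killing the whole process tree instead of relying on the test timeout alone. The following is only a minimal sketch under assumptions, not something tested against the Surefire ITs: it assumes Java 9+ (for `ProcessHandle`) and that the test harness holds a reference to the `Process` it launched. The class `ProcessTreeKiller` and its methods `killTree` and `runWithTimeout` are hypothetical names made up for illustration.

```java
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.TimeUnit;
import java.util.stream.Collectors;

// Hypothetical helper, not part of Surefire: kills a launched process and all its descendants.
public class ProcessTreeKiller {

    /**
     * Forcibly terminates all descendants of the given process, then the process itself.
     * Returns the number of processes for which termination was successfully requested.
     */
    public static int killTree(ProcessHandle root) {
        // Take a snapshot of the descendants first: once a parent is gone,
        // its children may no longer be reachable from the root handle.
        List<ProcessHandle> tree = root.descendants()
                .collect(Collectors.toCollection(ArrayList::new));
        tree.add(root);

        int killed = 0;
        for (ProcessHandle handle : tree) {
            if (handle.isAlive() && handle.destroyForcibly()) {
                killed++;
            }
        }
        return killed;
    }

    /**
     * Starts the process and waits for it up to the given timeout.
     * Returns true if it finished in time; otherwise kills the whole tree and returns false.
     */
    public static boolean runWithTimeout(ProcessBuilder builder, long timeout, TimeUnit unit)
            throws IOException, InterruptedException {
        Process process = builder.inheritIO().start();
        if (process.waitFor(timeout, unit)) {
            return true;
        }
        killTree(process.toHandle());
        // Give the operating system a moment to reap the root process.
        process.waitFor(10, TimeUnit.SECONDS);
        return false;
    }
}
```

A test could call something like `ProcessTreeKiller.runWithTimeout(new ProcessBuilder("mvn", "verify"), 5, TimeUnit.MINUTES)` and fail if it returns `false`. Whether this would catch all 4 processes per hanging test on Windows is an open question, since processes that were already detached from the tree would not show up in `descendants()`.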
