This is an automated email from the ASF dual-hosted git repository.

zjffdu pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/zeppelin.git

The following commit(s) were added to refs/heads/master by this push:

new 6ff782e [ZEPPELIN-4767]. Update and add more flink tutorial notes

6ff782e is described below

commit 6ff782efa55a8d06cf754687651d4350a301236d

Author: Jeff Zhang <zjf...@apache.org>

AuthorDate: Thu Apr 30 11:55:58 2020 +0800

[ZEPPELIN-4767]. Update and add more flink tutorial notes

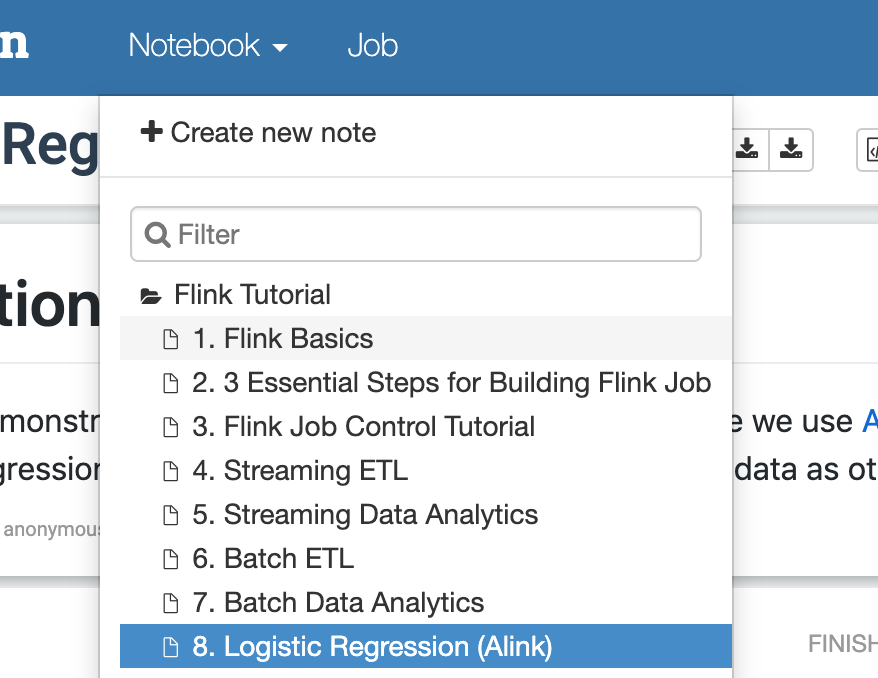

### What is this PR for?

This PR updates the existing Flink tutorial notes and adds new ones. After this PR,
there will be 8 Flink tutorial notes. Each note is also numbered so that
beginners know which note to read first.

### What type of PR is it?

[ Improvement ]

### Todos

* [ ] - Task

### What is the Jira issue?

* https://issues.apache.org/jira/browse/ZEPPELIN-4767

### How should this be tested?

* CI pass

### Screenshots (if appropriate)

### Questions:

* Do the license files need an update? No

* Are there breaking changes for older versions? No

* Does this need documentation? No

Author: Jeff Zhang <zjf...@apache.org>

Closes #3756 from zjffdu/ZEPPELIN-4767 and squashes the following commits:

ddda367fc [Jeff Zhang] [ZEPPELIN-4767]. Update and add more flink tutorial notes

---

...2YS7PCE.zpln => 1. Flink Basics_2F2YS7PCE.zpln} | 45 +-

...ial Steps for Building Flink Job_2F7SKEHPA.zpln | 658 +++++++++++++++++++++

.../3. Flink Job Control Tutorial_2F5RKHCDV.zpln | 435 ++++++++++++++

...D56B9B.zpln => 4. Streaming ETL_2EYD56B9B.zpln} | 113 ++--

.../5. Streaming Data Analytics_2EYT7Q6R8.zpln | 303 ++++++++++

..._2EW19CSPA.zpln => 6. Batch ETL_2EW19CSPA.zpln} | 153 +++--

...zpln => 7. Batch Data Analytics_2EZ9G3JJU.zpln} | 356 ++++-------

.../8. Logistic Regression (Alink)_2F4HJNWVN.zpln | 369 ++++++++++++

.../Streaming Data Analytics_2EYT7Q6R8.zpln | 270 ---------

9 files changed, 2082 insertions(+), 620 deletions(-)

diff --git a/notebook/Flink Tutorial/Flink Basic_2F2YS7PCE.zpln b/notebook/Flink Tutorial/1. Flink Basics_2F2YS7PCE.zpln

similarity index 95%

rename from notebook/Flink Tutorial/Flink Basic_2F2YS7PCE.zpln

rename to notebook/Flink Tutorial/1. Flink Basics_2F2YS7PCE.zpln

index 63904f0..6abd5b0 100644

--- a/notebook/Flink Tutorial/Flink Basic_2F2YS7PCE.zpln

+++ b/notebook/Flink Tutorial/1. Flink Basics_2F2YS7PCE.zpln

@@ -2,23 +2,24 @@

"paragraphs": [

{

"title": "Introduction",

- "text": "%md\n\n[Apache Flink](https://flink.apache.org/) is a framework

and distributed processing engine for stateful computations over unbounded and

bounded data streams. This is Flink tutorial for runninb classical wordcount in

both batch and streaming mode. \n\n",

+ "text": "%md\n\n# Introduction\n\n[Apache

Flink](https://flink.apache.org/) is a framework and distributed processing

engine for stateful computations over unbounded and bounded data streams. This

is Flink tutorial for runninb classical wordcount in both batch and streaming

mode. \n\nThere\u0027re 3 things you need to do before using flink in

zeppelin.\n\n* Download [Flink 1.10](https://flink.apache.org/downloads.html)

for scala 2.11 (Only scala-2.11 is supported, scala-2.12 is not [...]

"user": "anonymous",

- "dateUpdated": "2020-02-06 22:14:18.226",

+ "dateUpdated": "2020-04-29 22:14:29.556",

"config": {

"colWidth": 12.0,

"fontSize": 9.0,

"enabled": true,

"results": {},

"editorSetting": {

- "language": "text",

- "editOnDblClick": false,

+ "language": "markdown",

+ "editOnDblClick": true,

"completionKey": "TAB",

- "completionSupport": true

+ "completionSupport": false

},

- "editorMode": "ace/mode/text",

- "title": true,

- "editorHide": true

+ "editorMode": "ace/mode/markdown",

+ "title": false,

+ "editorHide": true,

+ "tableHide": false

},

"settings": {

"params": {},

@@ -29,7 +30,7 @@

"msg": [

{

"type": "HTML",

- "data": "\u003cdiv

class\u003d\"markdown-body\"\u003e\n\u003cp\u003e\u003ca

href\u003d\"https://flink.apache.org/\"\u003eApache Flink\u003c/a\u003e is a

framework and distributed processing engine for stateful computations over

unbounded and bounded data streams. This is Flink tutorial for runninb

classical wordcount in both batch and streaming

mode.\u003c/p\u003e\n\n\u003c/div\u003e"

+ "data": "\u003cdiv

class\u003d\"markdown-body\"\u003e\n\u003ch1\u003eIntroduction\u003c/h1\u003e\n\u003cp\u003e\u003ca

href\u003d\"https://flink.apache.org/\"\u003eApache Flink\u003c/a\u003e is a

framework and distributed processing engine for stateful computations over

unbounded and bounded data streams. This is Flink tutorial for runninb

classical wordcount in both batch and streaming

mode.\u003c/p\u003e\n\u003cp\u003eThere\u0026rsquo;re 3 things you need to do

before using [...]

}

]

},

@@ -38,15 +39,15 @@

"jobName": "paragraph_1580997898536_-1239502599",

"id": "paragraph_1580997898536_-1239502599",

"dateCreated": "2020-02-06 22:04:58.536",

- "dateStarted": "2020-02-06 22:14:15.858",

- "dateFinished": "2020-02-06 22:14:17.319",

+ "dateStarted": "2020-04-29 22:14:29.560",

+ "dateFinished": "2020-04-29 22:14:29.612",

"status": "FINISHED"

},

{

"title": "Batch WordCount",

"text": "%flink\n\nval data \u003d benv.fromElements(\"hello world\",

\"hello flink\", \"hello hadoop\")\ndata.flatMap(line \u003d\u003e

line.split(\"\\\\s\"))\n .map(w \u003d\u003e (w, 1))\n

.groupBy(0)\n .sum(1)\n .print()\n",

"user": "anonymous",

- "dateUpdated": "2020-02-25 11:34:15.508",

+ "dateUpdated": "2020-04-29 10:57:40.471",

"config": {

"colWidth": 6.0,

"fontSize": 9.0,

@@ -70,7 +71,7 @@

"msg": [

{

"type": "TEXT",

- "data": "\u001b[1m\u001b[34mdata\u001b[0m:

\u001b[1m\u001b[32morg.apache.flink.api.scala.DataSet[String]\u001b[0m \u003d

org.apache.flink.api.scala.DataSet@5883980f\n(flink,1)\n(hadoop,1)\n(hello,3)\n(world,1)\n"

+ "data": "\u001b[1m\u001b[34mdata\u001b[0m:

\u001b[1m\u001b[32morg.apache.flink.api.scala.DataSet[String]\u001b[0m \u003d

org.apache.flink.api.scala.DataSet@177908f2\n(flink,1)\n(hadoop,1)\n(hello,3)\n(world,1)\n"

}

]

},

@@ -79,15 +80,15 @@

"jobName": "paragraph_1580998080340_1531975932",

"id": "paragraph_1580998080340_1531975932",

"dateCreated": "2020-02-06 22:08:00.340",

- "dateStarted": "2020-02-25 11:34:15.520",

- "dateFinished": "2020-02-25 11:34:41.976",

+ "dateStarted": "2020-04-29 10:57:40.495",

+ "dateFinished": "2020-04-29 10:57:56.468",

"status": "FINISHED"

},

{

"title": "Streaming WordCount",

"text": "%flink\n\nval data \u003d senv.fromElements(\"hello world\",

\"hello flink\", \"hello hadoop\")\ndata.flatMap(line \u003d\u003e

line.split(\"\\\\s\"))\n .map(w \u003d\u003e (w, 1))\n .keyBy(0)\n .sum(1)\n

.print\n\nsenv.execute()",

"user": "anonymous",

- "dateUpdated": "2020-02-25 11:34:43.530",

+ "dateUpdated": "2020-04-29 10:58:47.117",

"config": {

"colWidth": 6.0,

"fontSize": 9.0,

@@ -111,7 +112,7 @@

"msg": [

{

"type": "TEXT",

- "data": "\u001b[1m\u001b[34mdata\u001b[0m:

\u001b[1m\u001b[32morg.apache.flink.streaming.api.scala.DataStream[String]\u001b[0m

\u003d

org.apache.flink.streaming.api.scala.DataStream@3385fb69\n\u001b[1m\u001b[34mres2\u001b[0m:

\u001b[1m\u001b[32morg.apache.flink.streaming.api.datastream.DataStreamSink[(String,

Int)]\u001b[0m \u003d

org.apache.flink.streaming.api.datastream.DataStreamSink@348a8146\n\u001b[1m\u001b[34mres3\u001b[0m:

\u001b[1m\u001b[32morg.apache.flink.api.common [...]

+ "data": "\u001b[1m\u001b[34mdata\u001b[0m:

\u001b[1m\u001b[32morg.apache.flink.streaming.api.scala.DataStream[String]\u001b[0m

\u003d

org.apache.flink.streaming.api.scala.DataStream@614839e8\n\u001b[1m\u001b[34mres2\u001b[0m:

\u001b[1m\u001b[32morg.apache.flink.streaming.api.datastream.DataStreamSink[(String,

Int)]\u001b[0m \u003d

org.apache.flink.streaming.api.datastream.DataStreamSink@1ead6506\n(hello,1)\n(world,1)\n(hello,2)\n(flink,1)\n(hello,3)\n(hadoop,1)\n\u001b[1m\u00

[...]

}

]

},

@@ -120,8 +121,8 @@

"jobName": "paragraph_1580998084555_-697674675",

"id": "paragraph_1580998084555_-697674675",

"dateCreated": "2020-02-06 22:08:04.555",

- "dateStarted": "2020-02-25 11:34:43.540",

- "dateFinished": "2020-02-25 11:34:45.863",

+ "dateStarted": "2020-04-29 10:58:47.129",

+ "dateFinished": "2020-04-29 10:58:49.108",

"status": "FINISHED"

},

{

@@ -186,17 +187,15 @@

"status": "READY"

}

],

- "name": "Flink Basic",

+ "name": "1. Flink Basics",

"id": "2F2YS7PCE",

"defaultInterpreterGroup": "spark",

"version": "0.9.0-SNAPSHOT",

- "permissions": {},

"noteParams": {},

"noteForms": {},

"angularObjects": {},

"config": {

"isZeppelinNotebookCronEnable": false

},

- "info": {},

- "path": "/Flink Tutorial/Flink Basic"

+ "info": {}

}

\ No newline at end of file

diff --git a/notebook/Flink Tutorial/2. 3 Essential Steps for Building Flink Job_2F7SKEHPA.zpln b/notebook/Flink Tutorial/2. 3 Essential Steps for Building Flink Job_2F7SKEHPA.zpln

new file mode 100644

index 0000000..03f8518

--- /dev/null

+++ b/notebook/Flink Tutorial/2. 3 Essential Steps for Building Flink Job_2F7SKEHPA.zpln

@@ -0,0 +1,658 @@

+{

+ "paragraphs": [

+ {

+ "title": "Introduction",

+ "text": "%md\n\n# Introduction\n\n\nTypically there\u0027re 3 essential

steps for building one flink job. And each step has its favorite tools.\n\n*

Define source/sink (SQL DDL)\n* Define data flow (Table Api / SQL)\n* Implement

business logic (UDF)\n\nThis tutorial demonstrate how to use build one typical

flink via these 3 steps and their favorite tools.\nIn this demo, we will do

real time analysis of cdn access data. First we read cdn access log from kafka

queue and do some proce [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-28 14:47:07.249",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "editorMode": "ace/mode/markdown",

+ "title": false,

+ "editorHide": true,

+ "tableHide": false

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv

class\u003d\"markdown-body\"\u003e\n\u003ch1\u003eIntroduction\u003c/h1\u003e\n\u003cp\u003eTypically

there\u0026rsquo;re 3 essential steps for building one flink job. And each

step has its favorite

tools.\u003c/p\u003e\n\u003cul\u003e\n\u003cli\u003eDefine source/sink (SQL

DDL)\u003c/li\u003e\n\u003cli\u003eDefine data flow (Table Api /

SQL)\u003c/li\u003e\n\u003cli\u003eImplement business logic

(UDF)\u003c/li\u003e\n\u003c/ul\u003e\n\u003cp\u003eThis tuto [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1587965294481_785664297",

+ "id": "paragraph_1587965294481_785664297",

+ "dateCreated": "2020-04-27 13:28:14.481",

+ "dateStarted": "2020-04-28 14:47:07.249",

+ "dateFinished": "2020-04-28 14:47:07.271",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Configuration",

+ "text": "%flink.conf\n\n# This example use kafka as source and mysql as

sink, so you need to specify flink kafka connector and flink jdbc connector

first.\n\nflink.execution.packages\torg.apache.flink:flink-connector-kafka_2.11:1.10.0,org.apache.flink:flink-connector-kafka-base_2.11:1.10.0,org.apache.flink:flink-json:1.10.0,org.apache.flink:flink-jdbc_2.11:1.10.0,mysql:mysql-connector-java:5.1.38\n\n#

Set taskmanager.memory.segment-size to be the smallest value just for this

demo, [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:07:01.902",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "text",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/text",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": []

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1585734329697_1695781588",

+ "id": "paragraph_1585734329697_1695781588",

+ "dateCreated": "2020-04-01 17:45:29.697",

+ "dateStarted": "2020-04-29 15:07:01.908",

+ "dateFinished": "2020-04-29 15:07:01.985",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Define source table",

+ "text": "%flink.ssql\n\nDROP table if exists cdn_access_log;\n\nCREATE

TABLE cdn_access_log (\n uuid VARCHAR,\n client_ip VARCHAR,\n request_time

BIGINT,\n response_size BIGINT,\n uri VARCHAR,\n event_ts BIGINT\n) WITH (\n

\u0027connector.type\u0027 \u003d \u0027kafka\u0027,\n

\u0027connector.version\u0027 \u003d \u0027universal\u0027,\n

\u0027connector.topic\u0027 \u003d \u0027cdn_events\u0027,\n

\u0027connector.properties.zookeeper.connect\u0027 \u003d

\u0027localhost:2181\u0027, [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:07:03.989",

+ "config": {

+ "colWidth": 6.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "Table has been dropped.\nTable has been created.\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1585733282496_767011327",

+ "id": "paragraph_1585733282496_767011327",

+ "dateCreated": "2020-04-01 17:28:02.496",

+ "dateStarted": "2020-04-29 15:07:03.999",

+ "dateFinished": "2020-04-29 15:07:16.317",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Define sink table",

+ "text": "%flink.ssql\n\nDROP table if exists cdn_access_statistic;\n\n--

Please create this mysql table first in your mysql instance. Flink won\u0027t

create mysql table for you.\n\nCREATE TABLE cdn_access_statistic (\n province

VARCHAR,\n access_count BIGINT,\n total_download BIGINT,\n download_speed

DOUBLE\n) WITH (\n \u0027connector.type\u0027 \u003d \u0027jdbc\u0027,\n

\u0027connector.url\u0027 \u003d

\u0027jdbc:mysql://localhost:3306/flink_cdn\u0027,\n \u0027connector.table\u0

[...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:07:05.522",

+ "config": {

+ "colWidth": 6.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "Table has been dropped.\nTable has been created.\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1585733896337_1928136072",

+ "id": "paragraph_1585733896337_1928136072",

+ "dateCreated": "2020-04-01 17:38:16.337",

+ "dateStarted": "2020-04-29 15:07:15.867",

+ "dateFinished": "2020-04-29 15:07:16.317",

+ "status": "FINISHED"

+ },

+ {

+ "title": "PyFlink UDF",

+ "text": "%flink.ipyflink\n\nimport re\nimport json\nfrom pyflink.table

import DataTypes\nfrom pyflink.table.udf import udf\nfrom urllib.parse import

quote_plus\nfrom urllib.request import urlopen\n\n# This UDF is to convert ip

address to country. Here we simply the logic just for demo

purpose.\n\n@udf(input_types\u003d[DataTypes.STRING()],

result_type\u003dDataTypes.STRING())\ndef ip_to_country(ip):\n\n countries

\u003d [\u0027USA\u0027, \u0027China\u0027, \u0027Japan\u0027, \u0 [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:07:24.842",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "python",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/python",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": []

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1585733368214_-94290606",

+ "id": "paragraph_1585733368214_-94290606",

+ "dateCreated": "2020-04-01 17:29:28.214",

+ "dateStarted": "2020-04-29 15:07:24.848",

+ "dateFinished": "2020-04-29 15:07:29.435",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Test UDF",

+ "text": "%flink.bsql\n\nselect ip_to_country(\u00272.10.01.1\u0027)",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 14:17:35.942",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "table",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "table": {

+ "tableGridState": {},

+ "tableColumnTypeState": {

+ "names": {

+ "EXPR$0": "string"

+ },

+ "updated": false

+ },

+ "tableOptionSpecHash":

"[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

filter for

columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

pagination for better

navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

a footer [...]

+ "tableOptionValue": {

+ "useFilter": false,

+ "showPagination": false,

+ "showAggregationFooter": false

+ },

+ "updated": false,

+ "initialized": false

+ }

+ },

+ "commonSetting": {}

+ }

+ }

+ },

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TABLE",

+ "data": "EXPR$0\nChina\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1586844130766_-1152098073",

+ "id": "paragraph_1586844130766_-1152098073",

+ "dateCreated": "2020-04-14 14:02:10.770",

+ "dateStarted": "2020-04-29 14:17:35.947",

+ "dateFinished": "2020-04-29 14:17:42.991",

+ "status": "FINISHED"

+ },

+ {

+ "title": "PyFlink Table Api",

+ "text": "%flink.ipyflink\n\nt \u003d

st_env.from_path(\"cdn_access_log\")\\\n .select(\"uuid, \"\n

\"ip_to_country(client_ip) as country, \" \n \"response_size,

request_time\")\\\n .group_by(\"country\")\\\n .select(\n \"country,

count(uuid) as access_count, \" \n \"sum(response_size) as

total_download, \" \n \"sum(response_size) * 1.0 / sum(request_time)

as download_speed\")\n #.insert_into(\"cdn_access_statistic\")\n\n# z.show

[...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 14:19:00.530",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "table",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "table": {

+ "tableGridState": {},

+ "tableColumnTypeState": {

+ "names": {

+ "country": "string",

+ "access_count": "string",

+ "total_download": "string",

+ "download_speed": "string"

+ },

+ "updated": false

+ },

+ "tableOptionSpecHash":

"[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

filter for

columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

pagination for better

navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

a footer [...]

+ "tableOptionValue": {

+ "useFilter": false,

+ "showPagination": false,

+ "showAggregationFooter": false

+ },

+ "updated": false,

+ "initialized": false

+ },

+ "multiBarChart": {

+ "xLabelStatus": "default",

+ "rotate": {

+ "degree": "-45"

+ },

+ "stacked": true

+ }

+ },

+ "commonSetting": {},

+ "keys": [

+ {

+ "name": "country",

+ "index": 0.0,

+ "aggr": "sum"

+ }

+ ],

+ "groups": [],

+ "values": [

+ {

+ "name": "access_count",

+ "index": 1.0,

+ "aggr": "sum"

+ }

+ ]

+ },

+ "helium": {}

+ }

+ },

+ "editorSetting": {

+ "language": "python",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/python",

+ "savepointDir": "/tmp/flink_python",

+ "title": true,

+ "editorHide": false,

+ "tableHide": false

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1586617588031_-638632283",

+ "id": "paragraph_1586617588031_-638632283",

+ "dateCreated": "2020-04-11 23:06:28.034",

+ "dateStarted": "2020-04-29 14:18:43.436",

+ "dateFinished": "2020-04-29 14:19:09.852",

+ "status": "ABORT"

+ },

+ {

+ "title": "Scala Table Api",

+ "text": "%flink\n\nval t \u003d stenv.from(\"cdn_access_log\")\n

.select(\"uuid, ip_to_country(client_ip) as country, response_size,

request_time\")\n .groupBy(\"country\")\n .select( \"country, count(uuid)

as access_count, sum(response_size) as total_download, sum(response_size) *

1.0 / sum(request_time) as download_speed\")\n

//.insertInto(\"cdn_access_statistic\")\n\n// z.show will display the result in

zeppelin front end in table format, you can uncomment the above ins [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:14:29.646",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "multiBarChart",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "table": {

+ "tableGridState": {},

+ "tableColumnTypeState": {

+ "names": {

+ "province": "string",

+ "access_count": "string",

+ "total_download": "string",

+ "download_speed": "string"

+ },

+ "updated": false

+ },

+ "tableOptionSpecHash":

"[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

filter for

columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

pagination for better

navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

a footer [...]

+ "tableOptionValue": {

+ "useFilter": false,

+ "showPagination": false,

+ "showAggregationFooter": false

+ },

+ "updated": false,

+ "initialized": false

+ },

+ "multiBarChart": {

+ "rotate": {

+ "degree": "-45"

+ },

+ "xLabelStatus": "default"

+ }

+ },

+ "commonSetting": {},

+ "keys": [

+ {

+ "name": "country",

+ "index": 0.0,

+ "aggr": "sum"

+ }

+ ],

+ "groups": [],

+ "values": [

+ {

+ "name": "access_count",

+ "index": 1.0,

+ "aggr": "sum"

+ }

+ ]

+ },

+ "helium": {}

+ }

+ },

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/scala",

+ "title": true,

+ "savepointDir": "/tmp/flink_scala",

+ "parallelism": "4",

+ "maxParallelism": "10"

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1585796091843_-1858464529",

+ "id": "paragraph_1585796091843_-1858464529",

+ "dateCreated": "2020-04-02 10:54:51.844",

+ "dateStarted": "2020-04-29 15:14:25.339",

+ "dateFinished": "2020-04-29 15:14:51.926",

+ "status": "ABORT"

+ },

+ {

+ "title": "Flink Sql",

+ "text": "%flink.ssql\n\ninsert into cdn_access_statistic\nselect

ip_to_country(client_ip) as country, \n count(uuid) as access_count, \n

sum(response_size) as total_download ,\n sum(response_size) * 1.0 /

sum(request_time) as download_speed\nfrom cdn_access_log\n group by

ip_to_country(client_ip)\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:12:26.431",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "savepointDir": "/tmp/flink_1",

+ "title": true,

+ "type": "update"

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1585757391555_145331506",

+ "id": "paragraph_1585757391555_145331506",

+ "dateCreated": "2020-04-02 00:09:51.558",

+ "dateStarted": "2020-04-29 15:07:30.418",

+ "dateFinished": "2020-04-29 15:12:22.104",

+ "status": "ABORT"

+ },

+ {

+ "text": "%md\n\n# Query sink table via jdbc interpreter\n\nYou can also

query the sink table (mysql) directly via jdbc interpreter. Here I will create

a jdbc interpreter named `mysql` and use it to query the sink table. Regarding

how to connect mysql in Zeppelin, refer this

[link](http://zeppelin.apache.org/docs/0.9.0-preview1/interpreter/jdbc.html#mysql)",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 12:02:12.920",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "editorMode": "ace/mode/markdown",

+ "editorHide": true,

+ "tableHide": false

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv

class\u003d\"markdown-body\"\u003e\n\u003ch1\u003eQuery sink table via jdbc

interpreter\u003c/h1\u003e\n\u003cp\u003eYou can also query the sink table

(mysql) directly via jdbc interpreter. Here I will create a jdbc interpreter

named \u003ccode\u003emysql\u003c/code\u003e and use it to query the sink

table. Regarding how to connect mysql in Zeppelin, refer this \u003ca

href\u003d\"http://zeppelin.apache.org/docs/0.9.0-preview1/interpreter/jdbc.html#mysql\"\

[...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1587976725546_2073084823",

+ "id": "paragraph_1587976725546_2073084823",

+ "dateCreated": "2020-04-27 16:38:45.548",

+ "dateStarted": "2020-04-29 12:02:12.923",

+ "dateFinished": "2020-04-29 12:02:12.939",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Query mysql",

+ "text": "%mysql\n\nselect * from flink_cdn.cdn_access_statistic",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:22:13.333",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "multiBarChart",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "table": {

+ "tableGridState": {},

+ "tableColumnTypeState": {

+ "names": {

+ "province": "string",

+ "access_count": "string",

+ "total_download": "string",

+ "download_speed": "string"

+ },

+ "updated": false

+ },

+ "tableOptionSpecHash":

"[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

filter for

columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

pagination for better

navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

a footer [...]

+ "tableOptionValue": {

+ "useFilter": false,

+ "showPagination": false,

+ "showAggregationFooter": false

+ },

+ "updated": false,

+ "initialized": false

+ },

+ "multiBarChart": {

+ "rotate": {

+ "degree": "-45"

+ },

+ "xLabelStatus": "default"

+ },

+ "stackedAreaChart": {

+ "rotate": {

+ "degree": "-45"

+ },

+ "xLabelStatus": "default"

+ },

+ "lineChart": {

+ "rotate": {

+ "degree": "-45"

+ },

+ "xLabelStatus": "default"

+ }

+ },

+ "commonSetting": {},

+ "keys": [

+ {

+ "name": "province",

+ "index": 0.0,

+ "aggr": "sum"

+ }

+ ],

+ "groups": [],

+ "values": [

+ {

+ "name": "access_count",

+ "index": 1.0,

+ "aggr": "sum"

+ }

+ ]

+ },

+ "helium": {}

+ }

+ },

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1586931452339_-1281904044",

+ "id": "paragraph_1586931452339_-1281904044",

+ "dateCreated": "2020-04-15 14:17:32.345",

+ "dateStarted": "2020-04-29 14:19:42.915",

+ "dateFinished": "2020-04-29 14:19:43.472",

+ "status": "FINISHED"

+ },

+ {

+ "text": "%flink.ipyflink\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-27 16:38:45.464",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "python",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/python"

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1587115507009_250592635",

+ "id": "paragraph_1587115507009_250592635",

+ "dateCreated": "2020-04-17 17:25:07.009",

+ "status": "READY"

+ }

+ ],

+ "name": "2. 3 Essential Steps for Building Flink Job",

+ "id": "2F7SKEHPA",

+ "defaultInterpreterGroup": "flink",

+ "version": "0.9.0-SNAPSHOT",

+ "noteParams": {},

+ "noteForms": {},

+ "angularObjects": {},

+ "config": {

+ "isZeppelinNotebookCronEnable": false

+ },

+ "info": {}

+}

\ No newline at end of file

diff --git a/notebook/Flink Tutorial/3. Flink Job Control Tutorial_2F5RKHCDV.zpln b/notebook/Flink Tutorial/3. Flink Job Control Tutorial_2F5RKHCDV.zpln

new file mode 100644

index 0000000..ae80c38

--- /dev/null

+++ b/notebook/Flink Tutorial/3. Flink Job Control Tutorial_2F5RKHCDV.zpln

@@ -0,0 +1,435 @@

+{

+ "paragraphs": [

+ {

+ "title": "Introduction",

+ "text": "%md\n\n# Introduction\n\nThis tutorial is to demonstrate how to

do job control in flink (job submission/cancel/resume).\n2 steps:\n1. Create

custom data stream and register it as flink table. The custom data stream is a

simulated web access logs. \n2. Query this flink table (pv for each page type),

you can cancel it and then resume it again w/o savepoint.\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:23:45.078",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "editorMode": "ace/mode/markdown",

+ "title": false,

+ "editorHide": true,

+ "tableHide": false

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv

class\u003d\"markdown-body\"\u003e\n\u003ch1\u003eIntroduction\u003c/h1\u003e\n\u003cp\u003eThis

tutorial is to demonstrate how to do job control in flink (job

submission/cancel/resume).\u003cbr /\u003e\n2

steps:\u003c/p\u003e\n\u003col\u003e\n\u003cli\u003eCreate custom data stream

and register it as flink table. The custom data stream is a simulated web

access logs.\u003c/li\u003e\n\u003cli\u003eQuery this flink table (pv for each

page type), you can canc [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1587964310955_443124874",

+ "id": "paragraph_1587964310955_443124874",

+ "dateCreated": "2020-04-27 13:11:50.955",

+ "dateStarted": "2020-04-29 15:23:45.080",

+ "dateFinished": "2020-04-29 15:23:45.094",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Register Data Source",

+ "text": "%flink \n\nimport

org.apache.flink.streaming.api.functions.source.SourceFunction\nimport

org.apache.flink.table.api.TableEnvironment\nimport

org.apache.flink.streaming.api.TimeCharacteristic\nimport

org.apache.flink.streaming.api.checkpoint.ListCheckpointed\nimport

java.util.Collections\nimport

scala.collection.JavaConversions._\n\nsenv.setStreamTimeCharacteristic(TimeCharacteristic.EventTime)\nsenv.enableCheckpointing(1000)\n\nval

data \u003d senv.addSource(new SourceFunc [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 14:21:46.802",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/scala",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TEXT",

+ "data": "import

org.apache.flink.streaming.api.functions.source.SourceFunction\nimport

org.apache.flink.table.api.TableEnvironment\nimport

org.apache.flink.streaming.api.TimeCharacteristic\nimport

org.apache.flink.streaming.api.checkpoint.ListCheckpointed\nimport

java.util.Collections\nimport

scala.collection.JavaConversions._\n\u001b[1m\u001b[34mres1\u001b[0m:

\u001b[1m\u001b[32morg.apache.flink.streaming.api.scala.StreamExecutionEnvironment\u001b[0m

\u003d org.apache.flink. [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1586733774605_1418179269",

+ "id": "paragraph_1586733774605_1418179269",

+ "dateCreated": "2020-04-13 07:22:54.605",

+ "dateStarted": "2020-04-29 14:21:46.817",

+ "dateFinished": "2020-04-29 14:21:55.214",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Resume flink sql job without savepoint",

+ "text": "%flink.ssql(type\u003dupdate)\n\nselect url, count(1) as c from log group by url",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 14:22:40.594",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "table",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "table": {

+ "tableGridState": {},

+ "tableColumnTypeState": {

+ "names": {

+ "url": "string",

+ "c": "string"

+ },

+ "updated": false

+ },

+ "tableOptionSpecHash":

"[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

filter for

columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

pagination for better

navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

a footer [...]

+ "tableOptionValue": {

+ "useFilter": false,

+ "showPagination": false,

+ "showAggregationFooter": false

+ },

+ "updated": false,

+ "initialized": false

+ },

+ "multiBarChart": {

+ "rotate": {

+ "degree": "-45"

+ },

+ "xLabelStatus": "default"

+ }

+ },

+ "commonSetting": {},

+ "keys": [

+ {

+ "name": "url",

+ "index": 0.0,

+ "aggr": "sum"

+ }

+ ],

+ "groups": [],

+ "values": [

+ {

+ "name": "pv",

+ "index": 1.0,

+ "aggr": "sum"

+ }

+ ]

+ },

+ "helium": {}

+ }

+ },

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "type": "update",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "ERROR",

+ "msg": [

+ {

+ "type": "TABLE",

+ "data": "url\tc\nhome\t19\nproduct\t55\nsearch\t38\n"

+ },

+ {

+ "type": "TEXT",

+ "data": "Fail to run sql command: select url, count(1) as c from

log group by url\njava.io.IOException: Fail to run stream sql job\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:166)\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:101)\n\tat

org.apache.zeppelin.flink.FlinkStreamSqlInterpreter.callInnerSelect(FlinkStreamSqlInterpreter.java:90)\n\tat

org.apache.zeppelin.flink.FlinkSqlInterrpeter.call [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1586847370895_154139610",

+ "id": "paragraph_1586847370895_154139610",

+ "dateCreated": "2020-04-14 14:56:10.896",

+ "dateStarted": "2020-04-29 14:22:40.600",

+ "dateFinished": "2020-04-29 14:22:56.844",

+ "status": "ABORT"

+ },

+ {

+ "title": "Resume flink sql job from savepoint",

+ "text": "%flink.ssql(type\u003dupdate,parallelism\u003d2,maxParallelism\u003d10,savepointDir\u003d/tmp/flink_a)\n\nselect url, count(1) as pv from log group by url",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 14:24:26.768",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "table",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "table": {

+ "tableGridState": {},

+ "tableColumnTypeState": {

+ "names": {

+ "url": "string",

+ "pv": "string"

+ },

+ "updated": false

+ },

+ "tableOptionSpecHash":

"[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

filter for

columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

pagination for better

navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

a footer [...]

+ "tableOptionValue": {

+ "useFilter": false,

+ "showPagination": false,

+ "showAggregationFooter": false

+ },

+ "updated": false,

+ "initialized": false

+ }

+ },

+ "commonSetting": {}

+ }

+ }

+ },

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "parallelism": "2",

+ "maxParallelism": "10",

+ "type": "update",

+ "select url": "select url",

+ "count(1": "count(1",

+ "savepointDir": "/tmp/flink_a",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TABLE",

+ "data": "url\tpv\nhome\t500\nproduct\t1500\nsearch\t1000\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1586733780533_1100270999",

+ "id": "paragraph_1586733780533_1100270999",

+ "dateCreated": "2020-04-13 07:23:00.533",

+ "dateStarted": "2020-04-29 14:24:26.772",

+ "dateFinished": "2020-04-29 14:29:07.320",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Resume flink scala job from savepoint",

+ "text": "%flink(parallelism\u003d1,maxParallelism\u003d10,savepointDir\u003d/tmp/flink_b)\n\nval table \u003d stenv.sqlQuery(\"select url, count(1) as pv from log group by url\")\n\nz.show(table, streamType\u003d\"update\")\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-15 16:11:54.114",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "table",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "table": {

+ "tableGridState": {},

+ "tableColumnTypeState": {

+ "names": {

+ "url": "string",

+ "pv": "string"

+ },

+ "updated": false

+ },

+ "tableOptionSpecHash":

"[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

filter for

columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

pagination for better

navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

a footer [...]

+ "tableOptionValue": {

+ "useFilter": false,

+ "showPagination": false,

+ "showAggregationFooter": false

+ },

+ "updated": false,

+ "initialized": false

+ }

+ },

+ "commonSetting": {}

+ }

+ }

+ },

+ "editorSetting": {

+ "language": "scala",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/scala",

+ "par": "par",

+ "parallelism": "1",

+ "maxParallelism": "10",

+ "savepointDir": "/tmp/flink_b",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TABLE",

+ "data": "url\tpv\nhome\t34\nproduct\t100\nsearch\t68\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1586733868269_783581378",

+ "id": "paragraph_1586733868269_783581378",

+ "dateCreated": "2020-04-13 07:24:28.269",

+ "dateStarted": "2020-04-15 15:40:02.218",

+ "dateFinished": "2020-04-15 15:40:27.915",

+ "status": "ABORT"

+ },

+ {

+ "title": "Resume flink python job from savepoint",

+ "text": "%flink.ipyflink(parallelism\u003d1,maxParallelism\u003d10,savepointDir\u003d/tmp/flink_c)\n\ntable \u003d st_env.sql_query(\"select url, count(1) as pv from log group by url\")\n\nz.show(table, stream_type\u003d\"update\")",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-27 14:45:28.045",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "table",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "table": {

+ "tableGridState": {},

+ "tableColumnTypeState": {

+ "names": {

+ "url": "string",

+ "pv": "string"

+ },

+ "updated": false

+ },

+ "tableOptionSpecHash":

"[{\"name\":\"useFilter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

filter for

columns\"},{\"name\":\"showPagination\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

pagination for better

navigation\"},{\"name\":\"showAggregationFooter\",\"valueType\":\"boolean\",\"defaultValue\":false,\"widget\":\"checkbox\",\"description\":\"Enable

a footer [...]

+ "tableOptionValue": {

+ "useFilter": false,

+ "showPagination": false,

+ "showAggregationFooter": false

+ },

+ "updated": false,

+ "initialized": false

+ }

+ },

+ "commonSetting": {}

+ }

+ }

+ },

+ "editorSetting": {

+ "language": "python",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/python",

+ "parallelism": "1",

+ "maxParallelism": "10",

+ "savepointDir": "/tmp/flink_c",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "TABLE",

+ "data": "url\tpv\nhome\t17\nproduct\t49\nsearch\t34\n"

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1586754954622_-1794803125",

+ "id": "paragraph_1586754954622_-1794803125",

+ "dateCreated": "2020-04-13 13:15:54.623",

+ "dateStarted": "2020-04-15 15:40:29.046",

+ "dateFinished": "2020-04-15 15:40:49.333",

+ "status": "ABORT"

+ },

+ {

+ "text": "%flink.ssql\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-18 19:04:17.969",

+ "config": {},

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1587207857968_1997116221",

+ "id": "paragraph_1587207857968_1997116221",

+ "dateCreated": "2020-04-18 19:04:17.969",

+ "status": "READY"

+ }

+ ],

+ "name": "3. Flink Job Control Tutorial",

+ "id": "2F5RKHCDV",

+ "defaultInterpreterGroup": "flink",

+ "version": "0.9.0-SNAPSHOT",

+ "noteParams": {},

+ "noteForms": {},

+ "angularObjects": {},

+ "config": {

+ "isZeppelinNotebookCronEnable": false

+ },

+ "info": {}

+}

\ No newline at end of file

diff --git a/notebook/Flink Tutorial/Streaming ETL_2EYD56B9B.zpln b/notebook/Flink Tutorial/4. Streaming ETL_2EYD56B9B.zpln

similarity index 57%

rename from notebook/Flink Tutorial/Streaming ETL_2EYD56B9B.zpln

rename to notebook/Flink Tutorial/4. Streaming ETL_2EYD56B9B.zpln

index d7d58f7..f18d0d1 100644

--- a/notebook/Flink Tutorial/Streaming ETL_2EYD56B9B.zpln

+++ b/notebook/Flink Tutorial/4. Streaming ETL_2EYD56B9B.zpln

@@ -2,9 +2,9 @@

"paragraphs": [

{

"title": "Overview",

- "text": "%md\n\nThis tutorial demonstrate how to use Flink do streaming processing via its streaming sql + udf. In this tutorial, we read data from kafka queue and do some simple processing (just filtering here) and then write it back to another kafka queue. We use this [docker](https://kafka-connect-datagen.readthedocs.io/en/latest/) to create kafka cluster and source data \n\n",

+ "text": "%md\n\nThis tutorial demonstrate how to use Flink do streaming

processing via its streaming sql + udf. In this tutorial, we read data from

kafka queue and do some simple processing (just filtering here) and then write

it back to another kafka queue. We use this

[docker](https://zeppelin-kafka-connect-datagen.readthedocs.io/en/latest/) to

create kafka cluster and source data \n\n* Make sure you add the following ip

host name mapping to your hosts file, otherwise you may not [...]

"user": "anonymous",

- "dateUpdated": "2020-03-23 15:03:54.596",

+ "dateUpdated": "2020-04-27 13:44:26.806",

"config": {

"runOnSelectionChange": true,

"title": true,

@@ -14,13 +14,14 @@

"enabled": true,

"results": {},

"editorSetting": {

- "language": "text",

- "editOnDblClick": false,

+ "language": "markdown",

+ "editOnDblClick": true,

"completionKey": "TAB",

- "completionSupport": true

+ "completionSupport": false

},

- "editorMode": "ace/mode/text",

- "editorHide": true

+ "editorMode": "ace/mode/markdown",

+ "editorHide": true,

+ "tableHide": false

},

"settings": {

"params": {},

@@ -31,7 +32,7 @@

"msg": [

{

"type": "HTML",

- "data": "\u003cdiv

class\u003d\"markdown-body\"\u003e\n\u003cp\u003eThis tutorial demonstrate how

to use Flink do streaming processing via its streaming sql + udf. In this

tutorial, we read data from kafka queue and do some simple processing (just

filtering here) and then write it back to another kafka queue. We use this

\u003ca

href\u003d\"https://kafka-connect-datagen.readthedocs.io/en/latest/\"\u003edocker\u003c/a\u003e

to create kafka cluster and source data\u003c/p\u003e [...]

+ "data": "\u003cdiv

class\u003d\"markdown-body\"\u003e\n\u003cp\u003eThis tutorial demonstrate how

to use Flink do streaming processing via its streaming sql + udf. In this

tutorial, we read data from kafka queue and do some simple processing (just

filtering here) and then write it back to another kafka queue. We use this

\u003ca

href\u003d\"https://zeppelin-kafka-connect-datagen.readthedocs.io/en/latest/\"\u003edocker\u003c/a\u003e

to create kafka cluster and source data\u003 [...]

}

]

},

@@ -40,15 +41,51 @@

"jobName": "paragraph_1579054287919_-61477360",

"id": "paragraph_1579054287919_-61477360",

"dateCreated": "2020-01-15 10:11:27.919",

- "dateStarted": "2020-01-19 15:36:08.710",

- "dateFinished": "2020-01-19 15:36:18.597",

+ "dateStarted": "2020-04-27 11:49:46.116",

+ "dateFinished": "2020-04-27 11:49:46.128",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Configure flink kafka connector",

+ "text": "%flink.conf\n\n# You need to run this paragraph first before running any flink code.\n\nflink.execution.packages\torg.apache.flink:flink-connector-kafka_2.11:1.10.0,org.apache.flink:flink-connector-kafka-base_2.11:1.10.0,org.apache.flink:flink-json:1.10.0",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:45:27.361",

+ "config": {

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "text",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/text",

+ "title": true

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": []

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1587959422055_1513725291",

+ "id": "paragraph_1587959422055_1513725291",

+ "dateCreated": "2020-04-27 11:50:22.055",

+ "dateStarted": "2020-04-29 15:45:27.366",

+ "dateFinished": "2020-04-29 15:45:27.369",

"status": "FINISHED"

},

{

"title": "Create kafka source table",

"text": "%flink.ssql\n\nDROP TABLE IF EXISTS source_kafka;\n\nCREATE

TABLE source_kafka (\n status STRING,\n direction STRING,\n event_ts

BIGINT\n) WITH (\n \u0027connector.type\u0027 \u003d \u0027kafka\u0027,

\n \u0027connector.version\u0027 \u003d \u0027universal\u0027,\n

\u0027connector.topic\u0027 \u003d \u0027generated.events\u0027,\n

\u0027connector.startup-mode\u0027 \u003d \u0027earliest-offset\u0027,\n

\u0027connector.properties.zookeeper.connect\u0027 [...]

"user": "anonymous",

- "dateUpdated": "2020-02-25 09:49:50.067",

+ "dateUpdated": "2020-04-29 15:45:29.234",

"config": {

"colWidth": 6.0,

"fontSize": 9.0,

@@ -83,15 +120,15 @@

"jobName": "paragraph_1578044987529_1240899810",

"id": "paragraph_1578044987529_1240899810",

"dateCreated": "2020-01-03 17:49:47.529",

- "dateStarted": "2020-02-25 09:49:50.077",

- "dateFinished": "2020-02-25 09:50:02.240",

+ "dateStarted": "2020-04-29 15:45:29.238",

+ "dateFinished": "2020-04-29 15:45:42.005",

"status": "FINISHED"

},

{

"title": "Create kafka sink table",

"text": "%flink.ssql\n\nDROP TABLE IF EXISTS sink_kafka;\n\nCREATE TABLE

sink_kafka (\n status STRING,\n direction STRING,\n event_ts

TIMESTAMP(3),\n WATERMARK FOR event_ts AS event_ts - INTERVAL \u00275\u0027

SECOND\n) WITH (\n \u0027connector.type\u0027 \u003d \u0027kafka\u0027,

\n \u0027connector.version\u0027 \u003d \u0027universal\u0027, \n

\u0027connector.topic\u0027 \u003d \u0027generated.events2\u0027,\n

\u0027connector.properties.zookeeper.connect [...]

"user": "anonymous",

- "dateUpdated": "2020-02-25 09:50:08.130",

+ "dateUpdated": "2020-04-29 15:45:30.663",

"config": {

"runOnSelectionChange": true,

"title": true,

@@ -126,15 +163,15 @@

"jobName": "paragraph_1578905686087_1273839451",

"id": "paragraph_1578905686087_1273839451",

"dateCreated": "2020-01-13 16:54:46.087",

- "dateStarted": "2020-02-25 09:50:08.136",

- "dateFinished": "2020-02-25 09:50:08.357",

+ "dateStarted": "2020-04-29 15:45:41.561",

+ "dateFinished": "2020-04-29 15:45:42.005",

"status": "FINISHED"

},

{

"title": "Transform the data in source table and write it to sink table",

"text": "%flink.ssql\n\ninsert into sink_kafka select status, direction, cast(event_ts/1000000000 as timestamp(3)) from source_kafka where status \u003c\u003e \u0027foo\u0027\n",

"user": "anonymous",

- "dateUpdated": "2020-02-25 09:50:10.681",

+ "dateUpdated": "2020-04-29 15:45:43.388",

"config": {

"runOnSelectionChange": true,

"title": true,

@@ -156,20 +193,33 @@

"params": {},

"forms": {}

},

+ "results": {

+ "code": "ERROR",

+ "msg": [

+ {

+ "type": "ANGULAR",

+ "data": "\u003ch1\u003eDuration: {{duration}} seconds\n"

+ },

+ {

+ "type": "TEXT",

+ "data": "Fail to run sql command: insert into sink_kafka select

status, direction, cast(event_ts/1000000000 as timestamp(3)) from source_kafka

where status \u003c\u003e \u0027foo\u0027\njava.io.IOException:

java.util.concurrent.ExecutionException:

org.apache.flink.client.program.ProgramInvocationException: Job failed (JobID:

9d350f962fffb020222af2bba3388912)\n\tat

org.apache.zeppelin.flink.FlinkSqlInterrpeter.callInsertInto(FlinkSqlInterrpeter.java:526)\n\tat

org.apache.zeppe [...]

+ }

+ ]

+ },

"apps": [],

"progressUpdateIntervalMs": 500,

"jobName": "paragraph_1578905715189_33634195",

"id": "paragraph_1578905715189_33634195",

"dateCreated": "2020-01-13 16:55:15.189",

- "dateStarted": "2020-02-25 09:50:10.751",

- "dateFinished": "2020-02-25 09:40:04.300",

+ "dateStarted": "2020-04-29 15:45:43.391",

+ "dateFinished": "2020-04-29 16:06:27.181",

"status": "ABORT"

},

{

"title": "Preview sink table result",

"text": "%flink.ssql(type\u003dupdate)\n\nselect * from sink_kafka order by event_ts desc limit 10;",

"user": "anonymous",

- "dateUpdated": "2020-02-07 10:00:28.140",

+ "dateUpdated": "2020-04-29 15:28:01.122",

"config": {

"runOnSelectionChange": true,

"title": true,

@@ -269,32 +319,19 @@

"params": {},

"forms": {}

},

- "results": {

- "code": "ERROR",

- "msg": [

- {

- "type": "TABLE",

- "data": "status\tdirection\tevent_ts\nbar\tright\t2020-02-07 02:05:27.0\nbar\tleft\t2020-02-07 02:05:27.0\nbar\tleft\t2020-02-07 02:05:27.0\nbaz\tup\t2020-02-07 02:05:27.0\nbaz\tup\t2020-02-07 02:05:27.0\nbaz\tdown\t2020-02-07 02:05:27.0\nbaz\tleft\t2020-02-07 02:05:32.0\nbaz\tup\t2020-02-07 02:05:32.0\nbaz\tup\t2020-02-07 02:05:32.0\nbaz\tleft\t2020-02-07 02:05:32.0\n"

- },

- {

- "type": "TEXT",

- "data": "Fail to run sql command: select * from sink_kafka order

by event_ts desc limit 10\njava.io.IOException: Fail to run stream sql

job\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:164)\n\tat

org.apache.zeppelin.flink.FlinkStreamSqlInterpreter.callSelect(FlinkStreamSqlInterpreter.java:108)\n\tat

org.apache.zeppelin.flink.FlinkSqlInterrpeter.callCommand(FlinkSqlInterrpeter.java:203)\n\tat

org.apache.zeppelin.flink.FlinkSqlInterrpet [...]

- }

- ]

- },

"apps": [],

"progressUpdateIntervalMs": 500,

"jobName": "paragraph_1579058345516_-1005807622",

"id": "paragraph_1579058345516_-1005807622",

"dateCreated": "2020-01-15 11:19:05.518",

- "dateStarted": "2020-02-07 10:00:28.144",

- "dateFinished": "2020-02-07 10:05:39.084",

+ "dateStarted": "2020-04-29 15:28:01.131",

+ "dateFinished": "2020-04-29 15:28:15.162",

"status": "ABORT"

},

{

"text": "%flink.ssql\n",

"user": "anonymous",

- "dateUpdated": "2020-02-06 22:17:54.896",

+ "dateUpdated": "2020-04-29 15:27:31.430",

"config": {

"colWidth": 12.0,

"fontSize": 9.0,

@@ -320,9 +357,9 @@

"status": "READY"

}

],

- "name": "Streaming ETL",

+ "name": "4. Streaming ETL",

"id": "2EYD56B9B",

- "defaultInterpreterGroup": "spark",

+ "defaultInterpreterGroup": "flink",

"version": "0.9.0-SNAPSHOT",

"noteParams": {},

"noteForms": {},

diff --git a/notebook/Flink Tutorial/5. Streaming Data Analytics_2EYT7Q6R8.zpln b/notebook/Flink Tutorial/5. Streaming Data Analytics_2EYT7Q6R8.zpln

new file mode 100644

index 0000000..e14406c

--- /dev/null

+++ b/notebook/Flink Tutorial/5. Streaming Data Analytics_2EYT7Q6R8.zpln

@@ -0,0 +1,303 @@

+{

+ "paragraphs": [

+ {

+ "title": "Overview",

+ "text": "%md\n\nThis tutorial demonstrate how to use Flink do streaming

analytics via its streaming sql + udf. Zeppelin now support 3 kinds of

streaming visualization.\n\n* Single - Single mode is for the case when the

result of sql statement is always one row, such as the following example\n*

Update - Update mode is suitable for the case when the output is more than one

rows, and always will be updated continuously. \n* Append - Append mode is

suitable for the scenario where ou [...]

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:31:49.376",

+ "config": {

+ "runOnSelectionChange": true,

+ "title": true,

+ "checkEmpty": true,

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "markdown",

+ "editOnDblClick": true,

+ "completionKey": "TAB",

+ "completionSupport": false

+ },

+ "editorMode": "ace/mode/markdown",

+ "editorHide": true,

+ "tableHide": false

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "SUCCESS",

+ "msg": [

+ {

+ "type": "HTML",

+ "data": "\u003cdiv

class\u003d\"markdown-body\"\u003e\n\u003cp\u003eThis tutorial demonstrate how

to use Flink do streaming analytics via its streaming sql + udf. Zeppelin now

support 3 kinds of streaming

visualization.\u003c/p\u003e\n\u003cul\u003e\n\u003cli\u003eSingle - Single

mode is for the case when the result of sql statement is always one row, such

as the following example\u003c/li\u003e\n\u003cli\u003eUpdate - Update mode is

suitable for the case when the output is [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1579054784565_2122156822",

+ "id": "paragraph_1579054784565_2122156822",

+ "dateCreated": "2020-01-15 10:19:44.565",

+ "dateStarted": "2020-04-29 15:31:49.379",

+ "dateFinished": "2020-04-29 15:31:49.549",

+ "status": "FINISHED"

+ },

+ {

+ "title": "Single row mode of Output",

+ "text": "%flink.ssql(type\u003dsingle, parallelism\u003d1, refreshInterval\u003d3000, template\u003d\u003ch1\u003e{1}\u003c/h1\u003e until \u003ch2\u003e{0}\u003c/h2\u003e)\n\nselect max(event_ts), count(1) from sink_kafka\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:45:47.116",

+ "config": {

+ "runOnSelectionChange": true,

+ "title": true,

+ "checkEmpty": true,

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {},

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "template": "\u003ch1\u003e{1}\u003c/h1\u003e until \u003ch2\u003e{0}\u003c/h2\u003e",

+ "refreshInterval": "3000",

+ "parallelism": "1",

+ "type": "single"

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "ERROR",

+ "msg": [

+ {

+ "type": "ANGULAR",

+ "data": "\u003ch1\u003e{{value_1}}\u003c/h1\u003e until \u003ch2\u003e{{value_0}}\u003c/h2\u003e\n"

+ },

+ {

+ "type": "TEXT",

+ "data": "Fail to run sql command: select max(event_ts), count(1)

from sink_kafka\njava.io.IOException: Fail to run stream sql job\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:166)\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:101)\n\tat

org.apache.zeppelin.flink.FlinkStreamSqlInterpreter.callInnerSelect(FlinkStreamSqlInterpreter.java:74)\n\tat

org.apache.zeppelin.flink.FlinkSqlInterrpeter.callS [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1578909960516_-1812187661",

+ "id": "paragraph_1578909960516_-1812187661",

+ "dateCreated": "2020-01-13 18:06:00.516",

+ "dateStarted": "2020-04-29 15:45:47.127",

+ "dateFinished": "2020-04-29 15:47:14.738",

+ "status": "ABORT"

+ },

+ {

+ "title": "Update mode of Output",

+ "text": "%flink.ssql(type\u003dupdate, refreshInterval\u003d2000, parallelism\u003d1)\n\nselect status, count(1) as pv from sink_kafka group by status",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:46:09.485",

+ "config": {

+ "runOnSelectionChange": true,

+ "title": true,

+ "checkEmpty": true,

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "multiBarChart",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "multiBarChart": {

+ "xLabelStatus": "default",

+ "rotate": {

+ "degree": "-45"

+ }

+ }

+ },

+ "commonSetting": {},

+ "keys": [

+ {

+ "name": "status",

+ "index": 0.0,

+ "aggr": "sum"

+ }

+ ],

+ "groups": [],

+ "values": [

+ {

+ "name": "pv",

+ "index": 1.0,

+ "aggr": "sum"

+ }

+ ]

+ },

+ "helium": {}

+ }

+ },

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "refreshInterval": "2000",

+ "parallelism": "1",

+ "type": "update",

+ "savepointDir": "/tmp/flink_2",

+ "editorHide": false

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "ERROR",

+ "msg": [

+ {

+ "type": "TABLE",

+ "data": "status\tpv\nbar\t48\nbaz\t28\n"

+ },

+ {

+ "type": "TEXT",

+ "data": "Fail to run sql command: select status, count(1) as pv

from sink_kafka group by status\njava.io.IOException: Fail to run stream sql

job\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:166)\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:101)\n\tat

org.apache.zeppelin.flink.FlinkStreamSqlInterpreter.callInnerSelect(FlinkStreamSqlInterpreter.java:90)\n\tat

org.apache.zeppelin.flink.FlinkSqlIn [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1578910004762_-286113604",

+ "id": "paragraph_1578910004762_-286113604",

+ "dateCreated": "2020-01-13 18:06:44.762",

+ "dateStarted": "2020-04-29 15:46:09.492",

+ "dateFinished": "2020-04-29 15:47:13.312",

+ "status": "ABORT"

+ },

+ {

+ "title": "Append mode of Output",

+ "text": "%flink.ssql(type\u003dappend, parallelism\u003d1, refreshInterval\u003d2000, threshold\u003d60000)\n\nselect TUMBLE_START(event_ts, INTERVAL \u00275\u0027 SECOND) as start_time, status, count(1) from sink_kafka\ngroup by TUMBLE(event_ts, INTERVAL \u00275\u0027 SECOND), status\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-04-29 15:46:40.174",

+ "config": {

+ "runOnSelectionChange": true,

+ "title": true,

+ "checkEmpty": true,

+ "colWidth": 12.0,

+ "fontSize": 9.0,

+ "enabled": true,

+ "results": {

+ "0": {

+ "graph": {

+ "mode": "lineChart",

+ "height": 300.0,

+ "optionOpen": false,

+ "setting": {

+ "lineChart": {

+ "xLabelStatus": "rotate",

+ "rotate": {

+ "degree": "-45"

+ }

+ }

+ },

+ "commonSetting": {},

+ "keys": [

+ {

+ "name": "start_time",

+ "index": 0.0,

+ "aggr": "sum"

+ }

+ ],

+ "groups": [

+ {

+ "name": "status",

+ "index": 1.0,

+ "aggr": "sum"

+ }

+ ],

+ "values": [

+ {

+ "name": "EXPR$2",

+ "index": 2.0,

+ "aggr": "sum"

+ }

+ ]

+ },

+ "helium": {}

+ }

+ },

+ "editorSetting": {

+ "language": "sql",

+ "editOnDblClick": false,

+ "completionKey": "TAB",

+ "completionSupport": true

+ },

+ "editorMode": "ace/mode/sql",

+ "refreshInterval": "2000",

+ "parallelism": "1",

+ "threshold": "60000",

+ "type": "append",

+ "savepointDir": "/tmp/flink_3"

+ },

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "results": {

+ "code": "ERROR",

+ "msg": [

+ {

+ "type": "TABLE",

+ "data": "start_time\tstatus\tEXPR$2\n2020-04-29

07:46:20.0\tbaz\t3\n2020-04-29 07:46:20.0\tbar\t3\n2020-04-29

07:46:25.0\tbaz\t2\n2020-04-29 07:46:25.0\tbar\t4\n2020-04-29

07:46:30.0\tbar\t4\n2020-04-29 07:46:30.0\tbaz\t2\n2020-04-29

07:46:35.0\tbar\t4\n2020-04-29 07:46:40.0\tbar\t5\n2020-04-29

07:46:40.0\tbaz\t2\n2020-04-29 07:46:45.0\tbar\t4\n2020-04-29

07:46:45.0\tbaz\t4\n2020-04-29 07:46:50.0\tbar\t4\n2020-04-29

07:46:50.0\tbaz\t3\n2020-04-29 07:46:55.0\tbaz\t3\n2020-04-2 [...]

+ },

+ {

+ "type": "TEXT",

+ "data": "Fail to run sql command: select TUMBLE_START(event_ts,

INTERVAL \u00275\u0027 SECOND) as start_time, status, count(1) from

sink_kafka\ngroup by TUMBLE(event_ts, INTERVAL \u00275\u0027 SECOND),

status\njava.io.IOException: Fail to run stream sql job\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:166)\n\tat

org.apache.zeppelin.flink.sql.AbstractStreamSqlJob.run(AbstractStreamSqlJob.java:101)\n\tat

org.apache.zeppelin.flink.FlinkS [...]

+ }

+ ]

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1578910016872_1942851900",

+ "id": "paragraph_1578910016872_1942851900",

+ "dateCreated": "2020-01-13 18:06:56.872",

+ "dateStarted": "2020-04-29 15:46:18.958",

+ "dateFinished": "2020-04-29 15:47:22.960",

+ "status": "ABORT"

+ },

+ {

+ "text": "%flink.ssql\n",

+ "user": "anonymous",

+ "dateUpdated": "2020-01-13 21:17:35.739",

+ "config": {},

+ "settings": {

+ "params": {},

+ "forms": {}

+ },

+ "apps": [],

+ "progressUpdateIntervalMs": 500,

+ "jobName": "paragraph_1578921455738_-1465781668",

+ "id": "paragraph_1578921455738_-1465781668",

+ "dateCreated": "2020-01-13 21:17:35.739",

+ "status": "READY"

+ }

+ ],

+ "name": "5. Streaming Data Analytics",

+ "id": "2EYT7Q6R8",

+ "defaultInterpreterGroup": "spark",

+ "version": "0.9.0-SNAPSHOT",

+ "noteParams": {},

+ "noteForms": {},

+ "angularObjects": {},

+ "config": {

+ "isZeppelinNotebookCronEnable": false

+ },

+ "info": {}

+}

\ No newline at end of file

diff --git a/notebook/Flink Tutorial/Batch ETL_2EW19CSPA.zpln b/notebook/Flink Tutorial/6. Batch ETL_2EW19CSPA.zpln

similarity index 78%

rename from notebook/Flink Tutorial/Batch ETL_2EW19CSPA.zpln

rename to notebook/Flink Tutorial/6. Batch ETL_2EW19CSPA.zpln

index 27b4f3a..d558a76 100644

--- a/notebook/Flink Tutorial/Batch ETL_2EW19CSPA.zpln

+++ b/notebook/Flink Tutorial/6. Batch ETL_2EW19CSPA.zpln

@@ -4,7 +4,7 @@

"title": "Overview",

"text": "%md\n\nThis tutorial demonstrates how to use Flink do batch ETL

via its batch sql + udf (scala, python \u0026 hive). Here\u0027s what we do in

this tutorial\n\n* Download

[bank](https://archive.ics.uci.edu/ml/datasets/bank+marketing) data via shell

interpreter to local\n* Process the raw data via flink batch sql \u0026 scala

udf which parse and clean the raw data\n* Write the structured and cleaned data

to another flink table via sql\n",

"user": "anonymous",

- "dateUpdated": "2020-02-03 11:22:34.799",

+ "dateUpdated": "2020-04-29 16:08:52.383",

"config": {

"runOnSelectionChange": true,

"title": true,

@@ -14,13 +14,14 @@

"enabled": true,

"results": {},

"editorSetting": {

- "language": "text",

- "editOnDblClick": false,

+ "language": "markdown",

+ "editOnDblClick": true,

"completionKey": "TAB",

- "completionSupport": true

+ "completionSupport": false

},

- "editorMode": "ace/mode/text",

- "editorHide": true

+ "editorMode": "ace/mode/markdown",

+ "editorHide": true,

+ "tableHide": false

},

"settings": {

"params": {},

@@ -40,15 +41,15 @@

"jobName": "paragraph_1579052523153_721650872",

"id": "paragraph_1579052523153_721650872",

"dateCreated": "2020-01-15 09:42:03.156",

- "dateStarted": "2020-02-03 11:22:34.823",

- "dateFinished": "2020-02-03 11:22:34.841",

+ "dateStarted": "2020-04-27 11:41:03.630",

+ "dateFinished": "2020-04-27 11:41:04.735",

"status": "FINISHED"

},

{

"title": "Download bank data",

- "text": "%sh\n\ncd /tmp\nwget https://archive.ics.uci.edu/ml/machine-learning-databases/00222/bank.zip\ntar -xvf bank.zip\n# upload data to hdfs if you want to run it in yarn mode\n# hadoop fs -put /tmp/bank.csv /tmp/bank.csv\n",

+ "text": "%sh\n\ncd /tmp\nrm -rf bank*\nwget https://archive.ics.uci.edu/ml/machine-learning-databases/00222/bank.zip\nunzip bank.zip\n# upload data to hdfs if you want to run it in yarn mode\n# hadoop fs -put /tmp/bank.csv /tmp/bank.csv\n",

"user": "anonymous",

- "dateUpdated": "2020-02-25 11:13:01.938",

+ "dateUpdated": "2020-04-27 11:37:36.288",

"config": {

"runOnSelectionChange": true,

"title": true,

@@ -74,7 +75,7 @@

"msg": [

{

"type": "TEXT",

- "data": "--2020-02-25 11:13:02-- https://archive.ics.uci.edu/ml/machine-learning-databases/00222/bank.zip\nResolving archive.ics.uci.edu (archive.ics.uci.edu)... 128.195.10.252\nConnecting to archive.ics.uci.edu (archive.ics.uci.edu)|128.195.10.252|:443... connected.\nHTTP request sent, awaiting response... 200 OK\nLength: 579043 (565K) [application/x-httpd-php]\nSaving to: ‘bank.zip’\n\n 0K .......... .......... .......... .......... .......... 8% 78.4K 7s\n 50K ... [...]

+ "data": "--2020-04-27 11:37:36--