This is an automated email from the ASF dual-hosted git repository.

dongjoon pushed a commit to branch master

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/master by this push:

new 170a8bca357 [SPARK-42207][INFRA] Update `build_and_test.yml` to use `Ubuntu 22.04`

170a8bca357 is described below

commit 170a8bca357e3057a1c37088960de31a261608b3

Author: Dongjoon Hyun <[email protected]>

AuthorDate: Thu Jan 26 21:19:06 2023 -0800

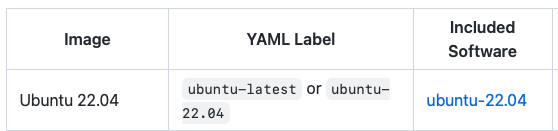

[SPARK-42207][INFRA] Update `build_and_test.yml` to use `Ubuntu 22.04`

### What changes were proposed in this pull request?

This PR aims to update all jobs of `build_and_test.yml` (except `tpcds` job) to use `Ubuntu 22.04`.
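
As a rough sketch of the resulting layout, the excerpt below shows one upgraded job next to the pinned `tpcds` job. It is illustrative only: indentation is reconstructed, steps and most keys are omitted, and the `tpcds-1g` job key is an assumption inferred from the `required` output name in the diff rather than something this message states.

```yaml
# Illustrative excerpt only; steps and most keys are omitted.
jobs:
  precondition:
    name: Check changes
    runs-on: ubuntu-22.04   # was ubuntu-20.04 before this commit

  tpcds-1g:                 # job key assumed for illustration
    name: Run TPC-DS queries with SF=1
    # Pin to 'Ubuntu 20.04' due to 'databricks/tpcds-kit' compilation
    runs-on: ubuntu-20.04
```

Naming the runner version explicitly in `runs-on`, rather than relying on `ubuntu-latest`, keeps each job on a known image until the workflow file itself is changed.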

### Why are the changes needed?

`ubuntu-latest` now points to `ubuntu-22.04`. Since branch-3.4 is already created, we can upgrade this for Apache Spark 3.5.0 safely.

- https://github.com/actions/runner-images

### Does this PR introduce _any_ user-facing change?

No.

### How was this patch tested?

Pass the CIs.

Closes #39762 from dongjoon-hyun/SPARK-42207.

Authored-by: Dongjoon Hyun <[email protected]>

Signed-off-by: Dongjoon Hyun <[email protected]>

---

.github/workflows/build_and_test.yml | 20 ++++++++++----------

1 file changed, 10 insertions(+), 10 deletions(-)

diff --git a/.github/workflows/build_and_test.yml b/.github/workflows/build_and_test.yml

index e4397554303..54b3d1d19d4 100644

--- a/.github/workflows/build_and_test.yml

+++ b/.github/workflows/build_and_test.yml

@@ -51,7 +51,7 @@ on:

jobs:

precondition:

name: Check changes

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

env:

GITHUB_PREV_SHA: ${{ github.event.before }}

outputs:

@@ -127,8 +127,7 @@ jobs:

name: "Build modules: ${{ matrix.modules }} ${{ matrix.comment }}"

needs: precondition

if: fromJson(needs.precondition.outputs.required).build == 'true'

- # Ubuntu 20.04 is the latest LTS. The next LTS is 22.04.

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

strategy:

fail-fast: false

matrix:

@@ -319,7 +318,7 @@ jobs:

# always run if pyspark == 'true', even infra-image is skip (such as non-master job)

if: always() && fromJson(needs.precondition.outputs.required).pyspark == 'true'

name: "Build modules: ${{ matrix.modules }}"

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

container:

image: ${{ needs.precondition.outputs.image_url }}

strategy:

@@ -428,7 +427,7 @@ jobs:

# always run if sparkr == 'true', even infra-image is skip (such as non-master job)

if: always() && fromJson(needs.precondition.outputs.required).sparkr == 'true'

name: "Build modules: sparkr"

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

container:

image: ${{ needs.precondition.outputs.image_url }}

env:

@@ -500,7 +499,7 @@ jobs:

# always run if lint == 'true', even infra-image is skip (such as non-master job)

if: always() && fromJson(needs.precondition.outputs.required).lint == 'true'

name: Linters, licenses, dependencies and documentation generation

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

env:

LC_ALL: C.UTF-8

LANG: C.UTF-8

@@ -636,7 +635,7 @@ jobs:

java:

- 11

- 17

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

steps:

- name: Checkout Spark repository

uses: actions/checkout@v3

@@ -686,7 +685,7 @@ jobs:

needs: precondition

if: fromJson(needs.precondition.outputs.required).scala-213 == 'true'

name: Scala 2.13 build with SBT

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

steps:

- name: Checkout Spark repository

uses: actions/checkout@v3

@@ -733,6 +732,7 @@ jobs:

needs: precondition

if: fromJson(needs.precondition.outputs.required).tpcds-1g == 'true'

name: Run TPC-DS queries with SF=1

+ # Pin to 'Ubuntu 20.04' due to 'databricks/tpcds-kit' compilation

runs-on: ubuntu-20.04

env:

SPARK_LOCAL_IP: localhost

@@ -831,7 +831,7 @@ jobs:

needs: precondition

if: fromJson(needs.precondition.outputs.required).docker-integration-tests == 'true'

name: Run Docker integration tests

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

env:

HADOOP_PROFILE: ${{ inputs.hadoop }}

HIVE_PROFILE: hive2.3

@@ -896,7 +896,7 @@ jobs:

needs: precondition

if: fromJson(needs.precondition.outputs.required).k8s-integration-tests == 'true'

name: Run Spark on Kubernetes Integration test

- runs-on: ubuntu-20.04

+ runs-on: ubuntu-22.04

steps:

- name: Checkout Spark repository

uses: actions/checkout@v3
