This is an automated email from the ASF dual-hosted git repository.

wenchen pushed a commit to branch branch-3.0

in repository https://gitbox.apache.org/repos/asf/spark.git

The following commit(s) were added to refs/heads/branch-3.0 by this push:

new 1421af8 [SPARK-31189][SQL][DOCS] Fix errors and missing parts for

datetime pattern document

1421af8 is described below

commit 1421af82a429e324e40caf18c70daf687c5ad674

Author: Kent Yao <[email protected]>

AuthorDate: Fri Mar 20 21:59:26 2020 +0800

[SPARK-31189][SQL][DOCS] Fix errors and missing parts for datetime pattern

document

### What changes were proposed in this pull request?

Fix errors and missing parts for datetime pattern document

1. The pattern we use is similar to DateTimeFormatter and SimpleDateFormat

but not identical, so the API docs should link to our own document instead of

referencing either of them.

2. Some pattern letters are missing

3. Some pattern letters are explicitly banned - Set('A', 'c', 'e', 'n', 'N')

4. The second-fraction pattern uses different logic for parsing and formatting
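
For item 3, the check can be illustrated with a small sketch. This is a plain Python transliteration of the loop changed in `DateTimeFormatterHelper.scala` in the diff below, not Spark code: the name `check_pattern` is hypothetical, `ValueError` stands in for `IllegalArgumentException`, and the letter set is copied from `unsupportedLetters` as it appears in the diff (which also contains 'p').

```python
# Letters rejected outside quoted text; copied from `unsupportedLetters`
# in the diff. The constant and function names here are illustrative only.
UNSUPPORTED_LETTERS = frozenset("AcenNp")


def check_pattern(pattern: str) -> None:
    """Raise ValueError if an unsupported letter appears outside quotes."""
    # Splitting on the single quote leaves unquoted text at even indexes
    # and quoted literals at odd indexes, as in the Scala code.
    for index, part in enumerate((pattern + " ").split("'")):
        if index % 2 == 0:
            for ch in part:
                if ch in UNSUPPORTED_LETTERS:
                    raise ValueError(f"Illegal pattern character: {ch}")


check_pattern("yyyy-MM-dd'T'HH:mm:ss.SSSz G")   # accepted
check_pattern("yyyy-MM-dd'e'HH:mm:ss")          # 'e' is quoted, so accepted
```

A pattern such as `yyyy-MM-dd eeee G` would be rejected by this sketch because `e` appears outside quoted text.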

### Why are the changes needed?

fix and improve doc

### Does this PR introduce any user-facing change?

yes, new and updated doc

### How was this patch tested?

pass Jenkins

viewed locally with `jekyll serve`

Closes #27956 from yaooqinn/SPARK-31189.

Authored-by: Kent Yao <[email protected]>

Signed-off-by: Wenchen Fan <[email protected]>

(cherry picked from commit 88ae6c44816bf6882ec3cc69dc12713a1ed6f89b)

Signed-off-by: Wenchen Fan <[email protected]>

---

docs/sql-ref-datetime-pattern.md | 48 +++++++++++++++++++---

python/pyspark/sql/functions.py | 13 +++---

python/pyspark/sql/readwriter.py | 37 +++++++++--------

python/pyspark/sql/streaming.py | 17 ++++----

.../catalyst/expressions/datetimeExpressions.scala | 24 +++++++----

.../catalyst/util/DateTimeFormatterHelper.scala | 4 +-

.../util/DateTimeFormatterHelperSuite.scala | 10 ++---

.../org/apache/spark/sql/DataFrameReader.scala | 16 ++++++--

.../org/apache/spark/sql/DataFrameWriter.scala | 16 ++++++--

.../scala/org/apache/spark/sql/functions.scala | 20 ++++++---

.../spark/sql/streaming/DataStreamReader.scala | 16 ++++++--

11 files changed, 155 insertions(+), 66 deletions(-)

diff --git a/docs/sql-ref-datetime-pattern.md b/docs/sql-ref-datetime-pattern.md

index f5c20ea..80585a4 100644

--- a/docs/sql-ref-datetime-pattern.md

+++ b/docs/sql-ref-datetime-pattern.md

@@ -21,11 +21,13 @@ license: |

There are several common scenarios for datetime usage in Spark:

-- CSV/JSON datasources use the pattern string for parsing and formatting time

content.

+- CSV/JSON datasources use the pattern string for parsing and formatting

datetime content.

-- Datetime functions related to convert string to/from `DateType` or

`TimestampType`. For example, unix_timestamp, date_format, to_unix_timestamp,

from_unixtime, to_date, to_timestamp, from_utc_timestamp, to_utc_timestamp, etc.

+- Datetime functions related to convert `StringType` to/from `DateType` or

`TimestampType`.

+ For example, `unix_timestamp`, `date_format`, `to_unix_timestamp`,

`from_unixtime`, `to_date`, `to_timestamp`, `from_utc_timestamp`,

`to_utc_timestamp`, etc.

+

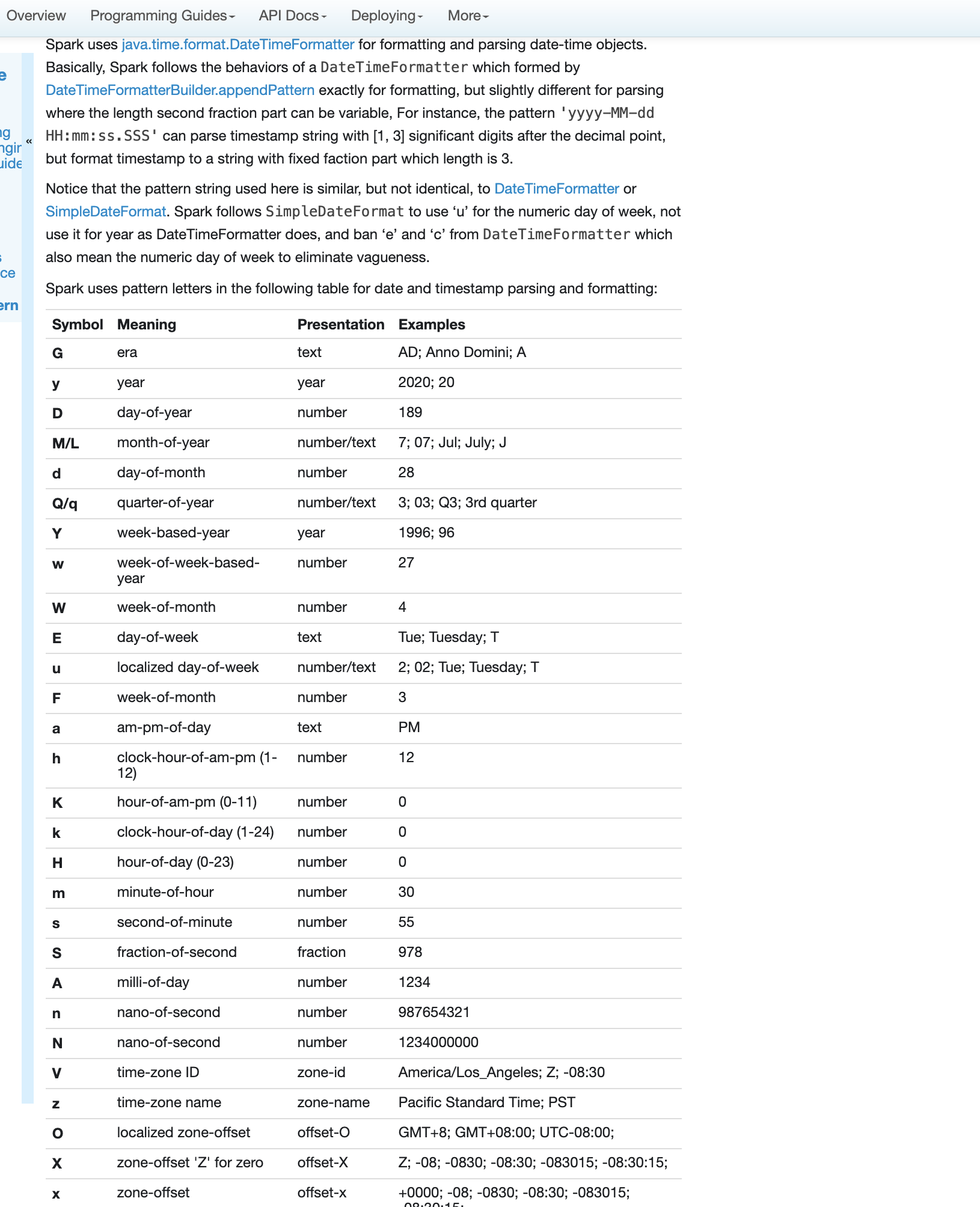

+Spark uses pattern letters in the following table for date and timestamp

parsing and formatting:

-Spark uses the below letters in date and timestamp parsing and formatting:

<table class="table">

<tr>

<th> <b>Symbol</b> </th>

@@ -52,7 +54,7 @@ Spark uses the below letters in date and timestamp parsing

and formatting:

<td> 189 </td>

</tr>

<tr>

- <td> <b>M</b> </td>

+ <td> <b>M/L</b> </td>

<td> month-of-year </td>

<td> number/text </td>

<td> 7; 07; Jul; July; J </td>

@@ -64,6 +66,12 @@ Spark uses the below letters in date and timestamp parsing

and formatting:

<td> 28 </td>

</tr>

<tr>

+ <td> <b>Q/q</b> </td>

+ <td> quarter-of-year </td>

+ <td> number/text </td>

+ <td> 3; 03; Q3; 3rd quarter </td>

+</tr>

+<tr>

<td> <b>Y</b> </td>

<td> week-based-year </td>

<td> year </td>

@@ -148,6 +156,12 @@ Spark uses the below letters in date and timestamp parsing

and formatting:

<td> 978 </td>

</tr>

<tr>

+ <td> <b>V</b> </td>

+ <td> time-zone ID </td>

+ <td> zone-id </td>

+ <td> America/Los_Angeles; Z; -08:30 </td>

+</tr>

+<tr>

<td> <b>z</b> </td>

<td> time-zone name </td>

<td> zone-name </td>

@@ -189,6 +203,18 @@ Spark uses the below letters in date and timestamp parsing

and formatting:

<td> literal </td>

<td> ' </td>

</tr>

+<tr>

+ <td> <b>[</b> </td>

+ <td> optional section start </td>

+ <td> </td>

+ <td> </td>

+</tr>

+<tr>

+ <td> <b>]</b> </td>

+ <td> optional section end </td>

+ <td> </td>

+ <td> </td>

+</tr>

</table>

The count of pattern letters determines the format.

@@ -199,11 +225,16 @@ The count of pattern letters determines the format.

- Number/Text: If the count of pattern letters is 3 or greater, use the Text

rules above. Otherwise use the Number rules above.

-- Fraction: Outputs the micro-of-second field as a fraction-of-second. The

micro-of-second value has six digits, thus the count of pattern letters is from

1 to 6. If it is less than 6, then the micro-of-second value is truncated, with

only the most significant digits being output.

+- Fraction: Use one or more (up to 9) contiguous `'S'` characters, e,g

`SSSSSS`, to parse and format fraction of second.

+ For parsing, the acceptable fraction length can be [1, the number of

contiguous 'S'].

+ For formatting, the fraction length would be padded to the number of

contiguous 'S' with zeros.

+ Spark supports datetime of micro-of-second precision, which has up to 6

significant digits, but can parse nano-of-second with exceeded part truncated.

- Year: The count of letters determines the minimum field width below which

padding is used. If the count of letters is two, then a reduced two digit form

is used. For printing, this outputs the rightmost two digits. For parsing, this

will parse using the base value of 2000, resulting in a year within the range

2000 to 2099 inclusive. If the count of letters is less than four (but not

two), then the sign is only output for negative years. Otherwise, the sign is

output if the pad width is [...]

-- Zone names: This outputs the display name of the time-zone ID. If the count

of letters is one, two or three, then the short name is output. If the count of

letters is four, then the full name is output. Five or more letters will fail.

+- Zone ID(V): This outputs the display the time-zone ID. Pattern letter count

must be 2.

+

+- Zone names(z): This outputs the display textual name of the time-zone ID. If

the count of letters is one, two or three, then the short name is output. If

the count of letters is four, then the full name is output. Five or more

letters will fail.

- Offset X and x: This formats the offset based on the number of pattern

letters. One letter outputs just the hour, such as '+01', unless the minute is

non-zero in which case the minute is also output, such as '+0130'. Two letters

outputs the hour and minute, without a colon, such as '+0130'. Three letters

outputs the hour and minute, with a colon, such as '+01:30'. Four letters

outputs the hour and minute and optional second, without a colon, such as

'+013015'. Five letters outputs the [...]

@@ -211,6 +242,11 @@ The count of pattern letters determines the format.

- Offset Z: This formats the offset based on the number of pattern letters.

One, two or three letters outputs the hour and minute, without a colon, such as

'+0130'. The output will be '+0000' when the offset is zero. Four letters

outputs the full form of localized offset, equivalent to four letters of

Offset-O. The output will be the corresponding localized offset text if the

offset is zero. Five letters outputs the hour, minute, with optional second if

non-zero, with colon. It outputs ' [...]

+- Optional section start and end: Use `[]` to define an optional section and

maybe nested.

+ During formatting, all valid data will be output even it is in the optional

section.

+ During parsing, the whole section may be missing from the parsed string.

+ An optional section is started by `[` and ended using `]` (or at the end of

the pattern).

+

More details for the text style:

- Short Form: Short text, typically an abbreviation. For example, day-of-week

Monday might output "Mon".

diff --git a/python/pyspark/sql/functions.py b/python/pyspark/sql/functions.py

index c23cc2b..bbadb54 100644

--- a/python/pyspark/sql/functions.py

+++ b/python/pyspark/sql/functions.py

@@ -920,8 +920,9 @@ def date_format(date, format):

format given by the second argument.

A pattern could be for instance `dd.MM.yyyy` and could return a string

like '18.03.1993'. All

- pattern letters of the Java class `java.time.format.DateTimeFormatter` can

be used.

+ pattern letters of `datetime pattern`_. can be used.

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

.. note:: Use when ever possible specialized functions like `year`. These

benefit from a

specialized implementation.

@@ -1138,11 +1139,12 @@ def months_between(date1, date2, roundOff=True):

@since(2.2)

def to_date(col, format=None):

"""Converts a :class:`Column` into :class:`pyspark.sql.types.DateType`

- using the optionally specified format. Specify formats according to

- `DateTimeFormatter

<https://docs.oracle.com/javase/8/docs/api/java/time/format/DateTimeFormatter.html>`_.

# noqa

+ using the optionally specified format. Specify formats according to

`datetime pattern`_.

By default, it follows casting rules to

:class:`pyspark.sql.types.DateType` if the format

is omitted. Equivalent to ``col.cast("date")``.

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

+

>>> df = spark.createDataFrame([('1997-02-28 10:30:00',)], ['t'])

>>> df.select(to_date(df.t).alias('date')).collect()

[Row(date=datetime.date(1997, 2, 28))]

@@ -1162,11 +1164,12 @@ def to_date(col, format=None):

@since(2.2)

def to_timestamp(col, format=None):

"""Converts a :class:`Column` into :class:`pyspark.sql.types.TimestampType`

- using the optionally specified format. Specify formats according to

- `DateTimeFormatter

<https://docs.oracle.com/javase/8/docs/api/java/time/format/DateTimeFormatter.html>`_.

# noqa

+ using the optionally specified format. Specify formats according to

`datetime pattern`_.

By default, it follows casting rules to

:class:`pyspark.sql.types.TimestampType` if the format

is omitted. Equivalent to ``col.cast("timestamp")``.

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

+

>>> df = spark.createDataFrame([('1997-02-28 10:30:00',)], ['t'])

>>> df.select(to_timestamp(df.t).alias('dt')).collect()

[Row(dt=datetime.datetime(1997, 2, 28, 10, 30))]

diff --git a/python/pyspark/sql/readwriter.py b/python/pyspark/sql/readwriter.py

index 5904688..e7ecb3b 100644

--- a/python/pyspark/sql/readwriter.py

+++ b/python/pyspark/sql/readwriter.py

@@ -221,12 +221,11 @@ class DataFrameReader(OptionUtils):

it uses the value specified in

``spark.sql.columnNameOfCorruptRecord``.

:param dateFormat: sets the string that indicates a date format.

Custom date formats

- follow the formats at

``java.time.format.DateTimeFormatter``. This

- applies to date type. If None is set, it uses the

+ follow the formats at `datetime pattern`_.

+ This applies to date type. If None is set, it uses

the

default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

- Custom date formats follow the formats at

- ``java.time.format.DateTimeFormatter``.

+ Custom date formats follow the formats at

`datetime pattern`_.

This applies to timestamp type. If None is

set, it uses the

default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param multiLine: parse one record, which may span multiple lines, per

file. If None is

@@ -255,6 +254,7 @@ class DataFrameReader(OptionUtils):

disables `partition discovery`_.

.. _partition discovery:

/sql-data-sources-parquet.html#partition-discovery

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

>>> df1 = spark.read.json('python/test_support/sql/people.json')

>>> df1.dtypes

@@ -430,12 +430,11 @@ class DataFrameReader(OptionUtils):

:param negativeInf: sets the string representation of a negative

infinity value. If None

is set, it uses the default value, ``Inf``.

:param dateFormat: sets the string that indicates a date format.

Custom date formats

- follow the formats at

``java.time.format.DateTimeFormatter``. This

- applies to date type. If None is set, it uses the

+ follow the formats at `datetime pattern`_.

+ This applies to date type. If None is set, it uses

the

default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

- Custom date formats follow the formats at

- ``java.time.format.DateTimeFormatter``.

+ Custom date formats follow the formats at

`datetime pattern`_.

This applies to timestamp type. If None is

set, it uses the

default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param maxColumns: defines a hard limit of how many columns a record

can have. If None is

@@ -491,6 +490,8 @@ class DataFrameReader(OptionUtils):

:param recursiveFileLookup: recursively scan a directory for files.

Using this option

disables `partition discovery`_.

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

+

>>> df = spark.read.csv('python/test_support/sql/ages.csv')

>>> df.dtypes

[('_c0', 'string'), ('_c1', 'string')]

@@ -850,12 +851,11 @@ class DataFrameWriter(OptionUtils):

known case-insensitive shorten names (none, bzip2,

gzip, lz4,

snappy and deflate).

:param dateFormat: sets the string that indicates a date format.

Custom date formats

- follow the formats at

``java.time.format.DateTimeFormatter``. This

- applies to date type. If None is set, it uses the

+ follow the formats at `datetime pattern`_.

+ This applies to date type. If None is set, it uses

the

default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

- Custom date formats follow the formats at

- ``java.time.format.DateTimeFormatter``.

+ Custom date formats follow the formats at

`datetime pattern`_.

This applies to timestamp type. If None is

set, it uses the

default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param encoding: specifies encoding (charset) of saved json files. If

None is set,

@@ -865,6 +865,8 @@ class DataFrameWriter(OptionUtils):

:param ignoreNullFields: Whether to ignore null fields when generating

JSON objects.

If None is set, it uses the default value, ``true``.

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

+

>>> df.write.json(os.path.join(tempfile.mkdtemp(), 'data'))

"""

self.mode(mode)

@@ -954,13 +956,12 @@ class DataFrameWriter(OptionUtils):

the default value, ``false``.

:param nullValue: sets the string representation of a null value. If

None is set, it uses

the default value, empty string.

- :param dateFormat: sets the string that indicates a date format.

Custom date formats

- follow the formats at

``java.time.format.DateTimeFormatter``. This

- applies to date type. If None is set, it uses the

+ :param dateFormat: sets the string that indicates a date format.

Custom date formats follow

+ the formats at `datetime pattern`_.

+ This applies to date type. If None is set, it uses

the

default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

- Custom date formats follow the formats at

- ``java.time.format.DateTimeFormatter``.

+ Custom date formats follow the formats at

`datetime pattern`_.

This applies to timestamp type. If None is

set, it uses the

default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param ignoreLeadingWhiteSpace: a flag indicating whether or not

leading whitespaces from

@@ -980,6 +981,8 @@ class DataFrameWriter(OptionUtils):

:param lineSep: defines the line separator that should be used for

writing. If None is

set, it uses the default value, ``\\n``. Maximum

length is 1 character.

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

+

>>> df.write.csv(os.path.join(tempfile.mkdtemp(), 'data'))

"""

self.mode(mode)

diff --git a/python/pyspark/sql/streaming.py b/python/pyspark/sql/streaming.py

index 6a7624f..a831678 100644

--- a/python/pyspark/sql/streaming.py

+++ b/python/pyspark/sql/streaming.py

@@ -459,12 +459,11 @@ class DataStreamReader(OptionUtils):

it uses the value specified in

``spark.sql.columnNameOfCorruptRecord``.

:param dateFormat: sets the string that indicates a date format.

Custom date formats

- follow the formats at

``java.time.format.DateTimeFormatter``. This

- applies to date type. If None is set, it uses the

+ follow the formats at `datetime pattern`_.

+ This applies to date type. If None is set, it uses

the

default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

- Custom date formats follow the formats at

- ``java.time.format.DateTimeFormatter``.

+ Custom date formats follow the formats at

`datetime pattern`_.

This applies to timestamp type. If None is

set, it uses the

default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param multiLine: parse one record, which may span multiple lines, per

file. If None is

@@ -491,6 +490,7 @@ class DataStreamReader(OptionUtils):

disables `partition discovery`_.

.. _partition discovery:

/sql-data-sources-parquet.html#partition-discovery

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

>>> json_sdf = spark.readStream.json(tempfile.mkdtemp(), schema =

sdf_schema)

>>> json_sdf.isStreaming

@@ -671,12 +671,11 @@ class DataStreamReader(OptionUtils):

:param negativeInf: sets the string representation of a negative

infinity value. If None

is set, it uses the default value, ``Inf``.

:param dateFormat: sets the string that indicates a date format.

Custom date formats

- follow the formats at

``java.time.format.DateTimeFormatter``. This

- applies to date type. If None is set, it uses the

+ follow the formats at `datetime pattern`_.

+ This applies to date type. If None is set, it uses

the

default value, ``yyyy-MM-dd``.

:param timestampFormat: sets the string that indicates a timestamp

format.

- Custom date formats follow the formats at

- ``java.time.format.DateTimeFormatter``.

+ Custom date formats follow the formats at

`datetime pattern`_.

This applies to timestamp type. If None is

set, it uses the

default value,

``yyyy-MM-dd'T'HH:mm:ss.SSSXXX``.

:param maxColumns: defines a hard limit of how many columns a record

can have. If None is

@@ -726,6 +725,8 @@ class DataStreamReader(OptionUtils):

:param recursiveFileLookup: recursively scan a directory for files.

Using this option

disables `partition discovery`_.

+ .. _datetime pattern:

https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html

+

>>> csv_sdf = spark.readStream.csv(tempfile.mkdtemp(), schema =

sdf_schema)

>>> csv_sdf.isStreaming

True

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

index d71db0d..7ca6ab1 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/expressions/datetimeExpressions.scala

@@ -601,7 +601,7 @@ case class WeekOfYear(child: Expression) extends

UnaryExpression with ImplicitCa

arguments = """

Arguments:

* timestamp - A date/timestamp or string to be converted to the given

format.

- * fmt - Date/time format pattern to follow. See

`java.time.format.DateTimeFormatter` for valid date

+ * fmt - Date/time format pattern to follow. See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">Datetime

Patterns</a> for valid date

and time format patterns.

""",

examples = """

@@ -676,13 +676,14 @@ case class DateFormatClass(left: Expression, right:

Expression, timeZoneId: Opti

* Converts time string with given pattern.

* Deterministic version of [[UnixTimestamp]], must have at least one

parameter.

*/

+// scalastyle:off line.size.limit

@ExpressionDescription(

usage = "_FUNC_(timeExp[, format]) - Returns the UNIX timestamp of the given

time.",

arguments = """

Arguments:

* timeExp - A date/timestamp or string which is returned as a UNIX

timestamp.

* format - Date/time format pattern to follow. Ignored if `timeExp` is

not a string.

- Default value is "yyyy-MM-dd HH:mm:ss". See

`java.time.format.DateTimeFormatter`

+ Default value is "yyyy-MM-dd HH:mm:ss". See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">Datetime

Patterns</a>

for valid date and time format patterns.

""",

examples = """

@@ -691,6 +692,7 @@ case class DateFormatClass(left: Expression, right:

Expression, timeZoneId: Opti

1460098800

""",

since = "1.6.0")

+// scalastyle:on line.size.limit

case class ToUnixTimestamp(

timeExp: Expression,

format: Expression,

@@ -712,9 +714,10 @@ case class ToUnixTimestamp(

override def prettyName: String = "to_unix_timestamp"

}

+// scalastyle:off line.size.limit

/**

* Converts time string with given pattern to Unix time stamp (in seconds),

returns null if fail.

- * See

[https://docs.oracle.com/javase/8/docs/api/java/time/format/DateTimeFormatter.html].

+ * See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">Datetime

Patterns</a>.

* Note that hive Language Manual says it returns 0 if fail, but in fact it

returns null.

* If the second parameter is missing, use "yyyy-MM-dd HH:mm:ss".

* If no parameters provided, the first parameter will be current_timestamp.

@@ -727,7 +730,7 @@ case class ToUnixTimestamp(

Arguments:

* timeExp - A date/timestamp or string. If not provided, this defaults

to current time.

* format - Date/time format pattern to follow. Ignored if `timeExp` is

not a string.

- Default value is "yyyy-MM-dd HH:mm:ss". See

`java.time.format.DateTimeFormatter`

+ Default value is "yyyy-MM-dd HH:mm:ss". See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

Datetime Patterns</a>

for valid date and time format patterns.

""",

examples = """

@@ -738,6 +741,7 @@ case class ToUnixTimestamp(

1460041200

""",

since = "1.5.0")

+// scalastyle:on line.size.limit

case class UnixTimestamp(timeExp: Expression, format: Expression, timeZoneId:

Option[String] = None)

extends UnixTime {

@@ -915,12 +919,13 @@ abstract class UnixTime extends ToTimestamp {

* format. If the format is missing, using format like "1970-01-01 00:00:00".

* Note that hive Language Manual says it returns 0 if fail, but in fact it

returns null.

*/

+// scalastyle:off line.size.limit

@ExpressionDescription(

usage = "_FUNC_(unix_time, format) - Returns `unix_time` in the specified

`format`.",

arguments = """

Arguments:

* unix_time - UNIX Timestamp to be converted to the provided format.

- * format - Date/time format pattern to follow. See

`java.time.format.DateTimeFormatter`

+ * format - Date/time format pattern to follow. See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">Datetime

Patterns</a>

for valid date and time format patterns.

""",

examples = """

@@ -929,6 +934,7 @@ abstract class UnixTime extends ToTimestamp {

1969-12-31 16:00:00

""",

since = "1.5.0")

+// scalastyle:on line.size.limit

case class FromUnixTime(sec: Expression, format: Expression, timeZoneId:

Option[String] = None)

extends BinaryExpression with TimeZoneAwareExpression with

ImplicitCastInputTypes {

@@ -1449,6 +1455,7 @@ case class ToUTCTimestamp(left: Expression, right:

Expression)

/**

* Parses a column to a date based on the given format.

*/

+// scalastyle:off line.size.limit

@ExpressionDescription(

usage = """

_FUNC_(date_str[, fmt]) - Parses the `date_str` expression with the `fmt`

expression to

@@ -1458,7 +1465,7 @@ case class ToUTCTimestamp(left: Expression, right:

Expression)

arguments = """

Arguments:

* date_str - A string to be parsed to date.

- * fmt - Date format pattern to follow. See

`java.time.format.DateTimeFormatter` for valid

+ * fmt - Date format pattern to follow. See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">Datetime

Patterns</a> for valid

date and time format patterns.

""",

examples = """

@@ -1469,6 +1476,7 @@ case class ToUTCTimestamp(left: Expression, right:

Expression)

2016-12-31

""",

since = "1.5.0")

+// scalastyle:on line.size.limit

case class ParseToDate(left: Expression, format: Option[Expression], child:

Expression)

extends RuntimeReplaceable {

@@ -1497,6 +1505,7 @@ case class ParseToDate(left: Expression, format:

Option[Expression], child: Expr

/**

* Parses a column to a timestamp based on the supplied format.

*/

+// scalastyle:off line.size.limit

@ExpressionDescription(

usage = """

_FUNC_(timestamp_str[, fmt]) - Parses the `timestamp_str` expression with

the `fmt` expression

@@ -1506,7 +1515,7 @@ case class ParseToDate(left: Expression, format:

Option[Expression], child: Expr

arguments = """

Arguments:

* timestamp_str - A string to be parsed to timestamp.

- * fmt - Timestamp format pattern to follow. See

`java.time.format.DateTimeFormatter` for valid

+ * fmt - Timestamp format pattern to follow. See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">Datetime

Patterns</a> for valid

date and time format patterns.

""",

examples = """

@@ -1517,6 +1526,7 @@ case class ParseToDate(left: Expression, format:

Option[Expression], child: Expr

2016-12-31 00:00:00

""",

since = "2.2.0")

+// scalastyle:on line.size.limit

case class ParseToTimestamp(left: Expression, format: Option[Expression],

child: Expression)

extends RuntimeReplaceable {

diff --git

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

index 4ed618e..05ec23f 100644

---

a/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

+++

b/sql/catalyst/src/main/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelper.scala

@@ -162,6 +162,8 @@ private object DateTimeFormatterHelper {

toFormatter(builder, TimestampFormatter.defaultLocale)

}

+ final val unsupportedLetters = Set('A', 'c', 'e', 'n', 'N', 'p')

+

/**

* In Spark 3.0, we switch to the Proleptic Gregorian calendar and use

DateTimeFormatter for

* parsing/formatting datetime values. The pattern string is incompatible

with the one defined

@@ -179,7 +181,7 @@ private object DateTimeFormatterHelper {

(pattern + " ").split("'").zipWithIndex.map {

case (patternPart, index) =>

if (index % 2 == 0) {

- for (c <- patternPart if c == 'c' || c == 'e') {

+ for (c <- patternPart if unsupportedLetters.contains(c)) {

throw new IllegalArgumentException(s"Illegal pattern character:

$c")

}

// The meaning of 'u' was day number of week in SimpleDateFormat, it

was changed to year

diff --git

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelperSuite.scala

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelperSuite.scala

index 811c4da..817e503 100644

---

a/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelperSuite.scala

+++

b/sql/catalyst/src/test/scala/org/apache/spark/sql/catalyst/util/DateTimeFormatterHelperSuite.scala

@@ -18,7 +18,7 @@

package org.apache.spark.sql.catalyst.util

import org.apache.spark.SparkFunSuite

-import

org.apache.spark.sql.catalyst.util.DateTimeFormatterHelper.convertIncompatiblePattern

+import org.apache.spark.sql.catalyst.util.DateTimeFormatterHelper._

class DateTimeFormatterHelperSuite extends SparkFunSuite {

@@ -36,10 +36,10 @@ class DateTimeFormatterHelperSuite extends SparkFunSuite {

=== "uuuu-MM'u contains in quoted text'''''HH:mm:ss")

assert(convertIncompatiblePattern("yyyy-MM-dd'T'HH:mm:ss.SSSz G")

=== "yyyy-MM-dd'T'HH:mm:ss.SSSz G")

- val e1 =

intercept[IllegalArgumentException](convertIncompatiblePattern("yyyy-MM-dd eeee

G"))

- assert(e1.getMessage === "Illegal pattern character: e")

- val e2 =

intercept[IllegalArgumentException](convertIncompatiblePattern("yyyy-MM-dd cccc

G"))

- assert(e2.getMessage === "Illegal pattern character: c")

+ unsupportedLetters.foreach { l =>

+ val e =

intercept[IllegalArgumentException](convertIncompatiblePattern(s"yyyy-MM-dd $l

G"))

+ assert(e.getMessage === s"Illegal pattern character: $l")

+ }

assert(convertIncompatiblePattern("yyyy-MM-dd uuuu") === "uuuu-MM-dd eeee")

assert(convertIncompatiblePattern("yyyy-MM-dd EEEE") === "uuuu-MM-dd EEEE")

assert(convertIncompatiblePattern("yyyy-MM-dd'e'HH:mm:ss") ===

"uuuu-MM-dd'e'HH:mm:ss")

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

index 9416126..a2a3518 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameReader.scala

@@ -391,11 +391,15 @@ class DataFrameReader private[sql](sparkSession:

SparkSession) extends Logging {

* `spark.sql.columnNameOfCorruptRecord`): allows renaming the new field

having malformed string

* created by `PERMISSIVE` mode. This overrides

`spark.sql.columnNameOfCorruptRecord`.</li>

* <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a

date format.

- * Custom date formats follow the formats at

`java.time.format.DateTimeFormatter`.

+ * Custom date formats follow the formats at

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

* This applies to date type.</li>

* <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the

string that

* indicates a timestamp format. Custom date formats follow the formats at

- * `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

+ * This applies to timestamp type.</li>

* <li>`multiLine` (default `false`): parse one record, which may span

multiple lines,

* per file</li>

* <li>`encoding` (by default it is not set): allows to forcibly set one of

standard basic

@@ -616,11 +620,15 @@ class DataFrameReader private[sql](sparkSession:

SparkSession) extends Logging {

* <li>`negativeInf` (default `-Inf`): sets the string representation of a

negative infinity

* value.</li>

* <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a

date format.

- * Custom date formats follow the formats at

`java.time.format.DateTimeFormatter`.

+ * Custom date formats follow the formats at

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

* This applies to date type.</li>

* <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the

string that

* indicates a timestamp format. Custom date formats follow the formats at

- * `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

+ * This applies to timestamp type.</li>

* <li>`maxColumns` (default `20480`): defines a hard limit of how many

columns

* a record can have.</li>

* <li>`maxCharsPerColumn` (default `-1`): defines the maximum number of

characters allowed

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

index 6946c1f..11feae9 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/DataFrameWriter.scala

@@ -750,11 +750,15 @@ final class DataFrameWriter[T] private[sql](ds:

Dataset[T]) {

* one of the known case-insensitive shorten names (`none`, `bzip2`, `gzip`,

`lz4`,

* `snappy` and `deflate`). </li>

* <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a

date format.

- * Custom date formats follow the formats at

`java.time.format.DateTimeFormatter`.

+ * Custom date formats follow the formats at

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

* This applies to date type.</li>

* <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the

string that

* indicates a timestamp format. Custom date formats follow the formats at

- * `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

+ * This applies to timestamp type.</li>

* <li>`encoding` (by default it is not set): specifies encoding (charset)

of saved json

* files. If it is not set, the UTF-8 charset will be used. </li>

* <li>`lineSep` (default `\n`): defines the line separator that should be

used for writing.</li>

@@ -871,11 +875,15 @@ final class DataFrameWriter[T] private[sql](ds:

Dataset[T]) {

* one of the known case-insensitive shorten names (`none`, `bzip2`, `gzip`,

`lz4`,

* `snappy` and `deflate`). </li>

* <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a

date format.

- * Custom date formats follow the formats at

`java.time.format.DateTimeFormatter`.

+ * Custom date formats follow the formats at

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

* This applies to date type.</li>

* <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the

string that

* indicates a timestamp format. Custom date formats follow the formats at

- * `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

+ * This applies to timestamp type.</li>

* <li>`ignoreLeadingWhiteSpace` (default `true`): a flag indicating whether

or not leading

* whitespaces from values being written should be skipped.</li>

* <li>`ignoreTrailingWhiteSpace` (default `true`): a flag indicating

defines whether or not

diff --git a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

index 69383d4..11c04e5 100644

--- a/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

+++ b/sql/core/src/main/scala/org/apache/spark/sql/functions.scala

@@ -2632,7 +2632,9 @@ object functions {

* Converts a date/timestamp/string to a value of string in the format

specified by the date

* format given by the second argument.

*

- * See [[java.time.format.DateTimeFormatter]] for valid date and time format

patterns

+ * See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>

+ * for valid date and time format patterns

*

* @param dateExpr A date, timestamp or string. If a string, the data must

be in a format that

* can be cast to a timestamp, such as `yyyy-MM-dd` or

`yyyy-MM-dd HH:mm:ss.SSSS`

@@ -2890,7 +2892,9 @@ object functions {

* representing the timestamp of that moment in the current system time zone

in the given

* format.

*

- * See [[java.time.format.DateTimeFormatter]] for valid date and time format

patterns

+ * See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>

+ * for valid date and time format patterns

*

* @param ut A number of a type that is castable to a long, such as string

or integer. Can be

* negative for timestamps before the unix epoch

@@ -2934,7 +2938,9 @@ object functions {

/**

* Converts time string with given pattern to Unix timestamp (in seconds).

*

- * See [[java.time.format.DateTimeFormatter]] for valid date and time format

patterns

+ * See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>

+ * for valid date and time format patterns

*

* @param s A date, timestamp or string. If a string, the data must be in a

format that can be

* cast to a date, such as `yyyy-MM-dd` or `yyyy-MM-dd

HH:mm:ss.SSSS`

@@ -2962,7 +2968,9 @@ object functions {

/**

* Converts time string with the given pattern to timestamp.

*

- * See [[java.time.format.DateTimeFormatter]] for valid date and time format

patterns

+ * See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>

+ * for valid date and time format patterns

*

* @param s A date, timestamp or string. If a string, the data must be in

a format that can be

* cast to a timestamp, such as `yyyy-MM-dd` or `yyyy-MM-dd

HH:mm:ss.SSSS`

@@ -2987,7 +2995,9 @@ object functions {

/**

* Converts the column into a `DateType` with a specified format

*

- * See [[java.time.format.DateTimeFormatter]] for valid date and time format

patterns

+ * See <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>

+ * for valid date and time format patterns

*

* @param e A date, timestamp or string. If a string, the data must be in

a format that can be

* cast to a date, such as `yyyy-MM-dd` or `yyyy-MM-dd

HH:mm:ss.SSSS`

diff --git

a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

index 6848be1..be7f021 100644

---

a/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

+++

b/sql/core/src/main/scala/org/apache/spark/sql/streaming/DataStreamReader.scala

@@ -253,11 +253,15 @@ final class DataStreamReader private[sql](sparkSession:

SparkSession) extends Lo

* `spark.sql.columnNameOfCorruptRecord`): allows renaming the new field

having malformed string

* created by `PERMISSIVE` mode. This overrides

`spark.sql.columnNameOfCorruptRecord`.</li>

* <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a

date format.

- * Custom date formats follow the formats at

`java.time.format.DateTimeFormatter`.

+ * Custom date formats follow the formats at

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

* This applies to date type.</li>

* <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the

string that

* indicates a timestamp format. Custom date formats follow the formats at

- * `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

+ * This applies to timestamp type.</li>

* <li>`multiLine` (default `false`): parse one record, which may span

multiple lines,

* per file</li>

* <li>`lineSep` (default covers all `\r`, `\r\n` and `\n`): defines the

line separator

@@ -319,11 +323,15 @@ final class DataStreamReader private[sql](sparkSession:

SparkSession) extends Lo

* <li>`negativeInf` (default `-Inf`): sets the string representation of a

negative infinity

* value.</li>

* <li>`dateFormat` (default `yyyy-MM-dd`): sets the string that indicates a

date format.

- * Custom date formats follow the formats at

`java.time.format.DateTimeFormatter`.

+ * Custom date formats follow the formats at

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

* This applies to date type.</li>

* <li>`timestampFormat` (default `yyyy-MM-dd'T'HH:mm:ss.SSSXXX`): sets the

string that

* indicates a timestamp format. Custom date formats follow the formats at

- * `java.time.format.DateTimeFormatter`. This applies to timestamp type.</li>

+ * <a

href="https://spark.apache.org/docs/latest/sql-ref-datetime-pattern.html">

+ * Datetime Patterns</a>.

+ * This applies to timestamp type.</li>

* <li>`maxColumns` (default `20480`): defines a hard limit of how many

columns

* a record can have.</li>

* <li>`maxCharsPerColumn` (default `-1`): defines the maximum number of

characters allowed
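
For readers of this patch, the following is a minimal sketch (not part of the commit) of where the pattern strings documented above are used: the `fmt` argument of `to_date`, `to_timestamp` and `date_format`, and the `dateFormat` / `timestampFormat` writer options. The object name, sample values, local master setting and temporary output directory are assumptions made for illustration; the pattern letters are chosen from those that appear in the defaults quoted in the diff.

```scala
import java.nio.file.Files

import org.apache.spark.sql.SparkSession
import org.apache.spark.sql.functions.{col, date_format, to_date, to_timestamp}

// Hypothetical driver object; the name is not from the Spark code base.
object DatetimePatternSketch {
  def main(args: Array[String]): Unit = {
    // Local session, for illustration only.
    val spark = SparkSession.builder()
      .master("local[1]")
      .appName("datetime-pattern-sketch")
      .getOrCreate()
    import spark.implicits._

    // Made-up sample row: a date and a timestamp, both held as strings.
    val raw = Seq(("20/03/2020", "2020-03-20 21:59:26")).toDF("d", "ts")

    // Parse the strings with explicit patterns.
    val parsed = raw
      .withColumn("d", to_date(col("d"), "dd/MM/yyyy"))
      .withColumn("ts", to_timestamp(col("ts"), "yyyy-MM-dd HH:mm:ss"))

    // Format the typed columns back to strings with other patterns.
    parsed.select(
      date_format(col("d"), "yyyy-MM-dd").as("d_iso"),
      date_format(col("ts"), "yyyy-MM-dd'T'HH:mm:ss").as("ts_iso")
    ).show(false)

    // The same kind of pattern string is accepted by the writer options
    // whose Scaladoc this patch updates.
    val outputPath = Files.createTempDirectory("datetime-pattern-sketch")
      .resolve("json-out").toString
    parsed.write
      .option("dateFormat", "yyyy/MM/dd")
      .option("timestampFormat", "yyyy/MM/dd HH:mm:ss")
      .json(outputPath)

    spark.stop()
  }
}
```

Because the commit message notes that Spark's patterns are similar to, but not identical to, `DateTimeFormatter` and `SimpleDateFormat`, any pattern letter beyond the common ones used here is best checked against the linked Datetime Patterns page.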
